I. Introduction

NetaYume Lumina is a text-to-image model fine-tuned from Neta Lumina, a high-quality anime-style image generation model developed by Neta.art Lab. It builds upon Lumina-Image-2.0, an open-source base model released by the Alpha-VLLM team at Shanghai AI Laboratory.

Key Features:

High-Quality Anime Generation: Generates detailed anime-style images with sharp outlines, vibrant colors, and smooth shading.

Improved Character Understanding: Better captures characters, especially those from the Danbooru dataset, resulting in more coherent and accurate character representations.

Enhanced Fine Details: Accurately generates accessories, clothing textures, hairstyles, and background elements with greater clarity.

II. Information

For version 1.0:

This model was fine-tuned from the NetaLumina model, version neta-lumina-beta-0624-raw, using a custom dataset consisting of approximately 10 million images. Training was conducted over a period of 3 weeks on 8× NVIDIA B200 GPUs.

For version 2.0:

This version has two variants: 2.0 and 2.0 plus.

Version 2.0:

I switched the base model to Neta Lumina v1 and trained this model on my custom dataset, which consists of images sourced from both e621 and Danbooru. The dataset is annotated with a mix of languages: 30% of the images are labeled in Japanese, 30% in Chinese (50% using Danbooru-style tags and 50% in natural language), and the remaining 40% in natural English descriptions.

For annotations, I used ChatGPT along with other models capable of prompt refinement to improve tag quality. Additionally, instead of training at a fixed resolution of 1024, I modified the code to support multiscale training, dynamically resizing images between 768 and 1536 during training.
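As a rough illustration of the multiscale idea, here is a minimal sketch that picks a random target size for each image. It assumes the longer side is drawn uniformly between 768 and 1536 and that both sides are snapped to a multiple of 64. The function names, the uniform sampling, and the multiple-of-64 snapping are assumptions for illustration only; the actual training code is not shown in this card.

```python
import random

MIN_SIDE = 768    # lower bound stated in the text
MAX_SIDE = 1536   # upper bound stated in the text
MULTIPLE = 64     # assumption: sides are snapped to a multiple of 64


def snap(value: float, multiple: int = MULTIPLE) -> int:
    """Round to the nearest multiple, never going below one multiple."""
    return max(multiple, int(round(value / multiple)) * multiple)


def pick_training_size(width: int, height: int, rng: random.Random) -> tuple[int, int]:
    """Pick a random target size whose longer side lies in [MIN_SIDE, MAX_SIDE].

    The aspect ratio is preserved approximately (up to snapping).
    """
    long_side = rng.randrange(MIN_SIDE, MAX_SIDE + 1, MULTIPLE)
    scale = long_side / max(width, height)
    return snap(width * scale), snap(height * scale)


# Demo: the same 1200x1800 image gets a different target size on each draw.
rng = random.Random(0)
for _ in range(3):
    print(pick_training_size(1200, 1800, rng))
```

In a real pipeline the size would more likely be chosen per batch or per bucket so that all images in a batch share one shape; that grouping step is left out here.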

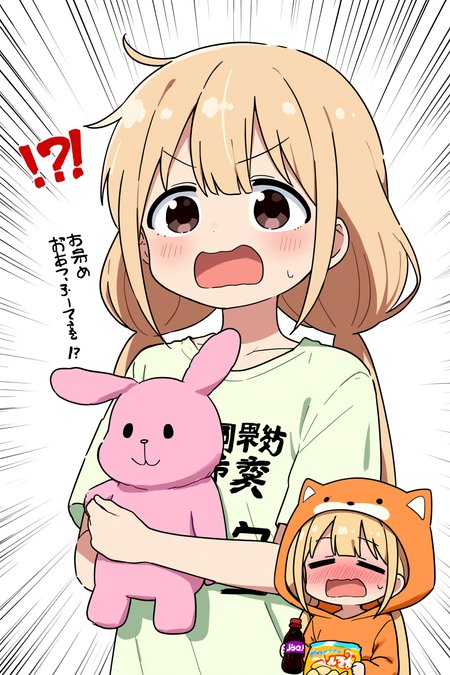

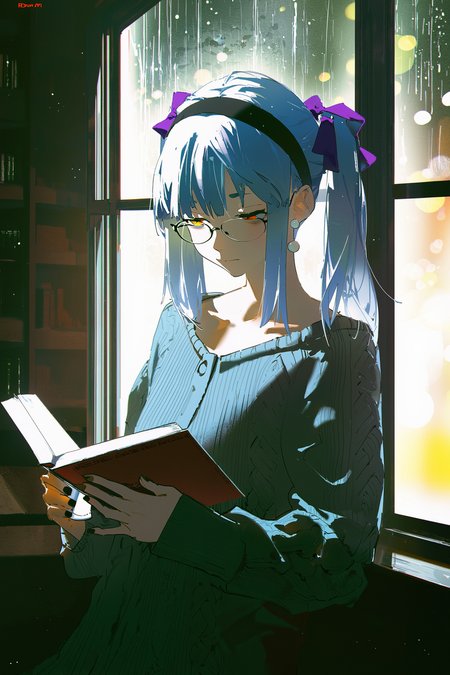

Notes: Currently, I've only evaluated this model using benchmark tests, so its full capabilities are still uncertain. However, based on my initial testing, the model performs quite well when generating images at a resolution of 1312x2048 (as shown in the sample images I provided).

Moreover, based on my testing, this version can generate images at sizes up to 2048x2048.

Version 2.0 plus:

This model is fine-tuned from version 2.0 on a dataset of higher-quality images. In this dataset, each image is annotated with both natural language descriptions and Danbooru-style tags.

The training procedure follows the same overall design as version 2.0, but is divided into three stages.

In the first two stages, the top 10 layers are frozen, and training is performed separately on the Danbooru-labeled subset and the natural language-labeled subset.

In the final stage, all layers are unfrozen and optimized jointly on the full dataset, which incorporates both Danbooru and natural language annotations.
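The three-stage schedule above can be written down as a small table of stages plus a per-layer "trainable" mask. This is only a sketch: the stage order for the two subsets, the reading of "top 10 layers" as the first 10 blocks, and the layer count used in the demo are all assumptions, and the names `Stage`, `STAGES`, and `trainable_mask` are hypothetical.

```python
from dataclasses import dataclass

FROZEN_LAYERS = 10  # from the text: 10 layers are frozen in the first two stages


@dataclass(frozen=True)
class Stage:
    name: str
    subset: str          # which part of the dataset is used
    frozen_layers: int   # how many layers are kept fixed


# Assumption: the order of the two subset stages is illustrative only.
STAGES = [
    Stage("stage 1", "Danbooru-tag subset", FROZEN_LAYERS),
    Stage("stage 2", "natural-language subset", FROZEN_LAYERS),
    Stage("stage 3", "full dataset", 0),
]


def trainable_mask(num_layers: int, stage: Stage) -> list[bool]:
    """Return one flag per layer: True if the layer is updated in this stage.

    Assumption: "top 10 layers" is read here as the first 10 blocks
    (indices 0-9); the text does not say which end of the network is meant.
    """
    return [index >= stage.frozen_layers for index in range(num_layers)]


NUM_LAYERS = 26  # hypothetical depth, chosen only for the demo
for stage in STAGES:
    mask = trainable_mask(NUM_LAYERS, stage)
    print(f"{stage.name}: {stage.subset}, trainable layers = {sum(mask)}/{NUM_LAYERS}")
```

In a framework such as PyTorch, a mask like this would typically be applied by toggling gradient tracking on each block's parameters before building the optimizer for that stage.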

This version reduces the issue of generated images exhibiting an artificial or 'AI-like' appearance, while also improving spatial understanding. For instance, the model is able to generate images in which a character is positioned on the left or right side of the image according to the prompt (as illustrated in the example). In addition, it provides modest improvements in rendering artist-specific styles.

GGUF quantizations are available here: https://huggingface.co/Immac/NetaYume-Lumina-Image-2.0-GGUF

Version 3.0:

This version introduces new character knowledge and also improves some existing characters that previously could not be generated (I will provide a list of the improved characters later). Please note, however, that not every character in the list is guaranteed to generate correctly, since I aim to preserve the old knowledge while also improving text rendering, anatomy (when using artist styles, the model may still sometimes produce inaccurate or imperfect anatomy), and model stability, along with some additional secret improvements.

For generating text within images, I recommend using the following system prompt, which may help you achieve better results: "You are an image generation assistant if the prompt includes quoted or labeled on image text render it verbatim preserving spelling punctuation and case. <Prompt Start>"
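If you build prompts in a script, a small helper like the one below can prepend the recommended system prompt. This is a minimal sketch: the helper name `build_prompt` is hypothetical, and joining the two parts with a single space after the `<Prompt Start>` marker is an assumption about how the final prompt string is laid out. In ComfyUI you would simply type the system prompt in front of your own prompt.

```python
# System prompt copied verbatim from the recommendation above.
SYSTEM_PROMPT = (
    "You are an image generation assistant if the prompt includes quoted or "
    "labeled on image text render it verbatim preserving spelling punctuation "
    "and case. <Prompt Start>"
)


def build_prompt(user_prompt: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Prepend the system prompt to the user's prompt.

    Assumption: the two parts are joined by a single space, so the user
    prompt follows directly after the <Prompt Start> marker.
    """
    return f"{system_prompt} {user_prompt.strip()}"


# Demo: the text to render is quoted inside the user prompt.
print(build_prompt('1girl, holding a sign that says "Hello, world!"'))
```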

Here is a link to a gallery of example images generated in artist styles using this version: Artist Style Gallery. Thanks to @LyloGummy for contributing.

For version 3.5 (pre-trained model):

This version is a pre-trained model (I’m not sure what to call it, but it’s basically a continuation of the previous work by the Neta team, using the Neta Lumina v1.0 model). To clarify further, versions 2.0 Plus and 3.0 were fine-tuned from this pre-trained model. My workflow involves using the best checkpoint from this pre-trained model at that time and fine-tuning it.

In this version, I also updated my dataset (only the Danbooru dataset, current as of 12:00 a.m. on September 3). The new data contains only tags, since I don’t have anyone to help me validate natural-language prompts.

Basically, I didn’t change the dataset much; I just updated it with the latest data and part of the Neta team's dataset, then merged that with the previous one. As a result, the model still generates images that look quite similar to before. However, if you use the correct trigger prompts, the outputs will differ. The good news is that it still retains all of its previous knowledge accurately (some artist styles have been improved).

In addition, the model's default style is now stable, and anatomy and text generation seem better than in previous versions.

Lastly, this model is different from the test version I released on Hugging Face.

The Diffusers-format weights for this version are available on Hugging Face: duongve/NetaYume-Lumina-Image-2.0-Diffusers-v35-pretrained

For version 4.0:

In this version, I changed the way I annotate the dataset. Instead of using only tags and natural language, I now use both unstructured and structured annotations for each image: in addition to tags and natural-language descriptions, I added JSON and XML formats. For the tag, JSON, and XML formats (in both natural-language and tag form), I also shuffle the annotations. For example, here is the XML format (the JSON format is similar) when formatted as tags:

    <tags>
      <characters>kubo nagisa</characters>
      <general>long hair, purple hair, purple eyes</general>
    </tags>

During preprocessing for each epoch, when this XML annotation is encountered, I randomly drop individual tags such as “purple hair” or other character-related attributes with some probability. I also shuffle the fields, so for example, the <general> field may appear before the <characters> field.

In this version, I also updated my dataset. It now includes the Danbooru dataset up to October 10, 2025. In addition, ten days ago I added a small dataset during the period when training was paused.

In this version, I reduced AI artifacts and improved the character anatomy. It’s still not perfect, but when you use natural language in the prompt combined with a suitable negative prompt, the results are noticeably better.

Note: All previous knowledge is still retained; you just need to use the correct trigger tags or prompts. Additionally, the current default style is set to anime for greater stability.
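The per-epoch augmentation of XML annotations described in this section (random tag dropout plus shuffling of tags and fields) could be sketched roughly as follows. This is not the actual preprocessing code. The drop probability of 0.1, the choice to drop only tags in the `<general>` field, and the function name `augment_xml_caption` are assumptions made for illustration.

```python
import random
import xml.etree.ElementTree as ET

DROP_PROBABILITY = 0.1          # assumption: the text only says "some probability"
DROPPABLE_FIELDS = {"general"}  # assumption: only general tags are dropped here


def augment_xml_caption(xml_text: str, rng: random.Random,
                        drop_probability: float = DROP_PROBABILITY) -> str:
    """Randomly drop tags, shuffle tags within each field, and shuffle the fields."""
    root = ET.fromstring(xml_text)
    fields = list(root)
    for field in fields:
        tags = [t.strip() for t in (field.text or "").split(",") if t.strip()]
        if field.tag in DROPPABLE_FIELDS:
            # Keep each tag with probability (1 - drop_probability).
            tags = [t for t in tags if rng.random() >= drop_probability]
        rng.shuffle(tags)
        field.text = ", ".join(tags)
    # Reorder the child elements, e.g. <general> may now precede <characters>.
    rng.shuffle(fields)
    for field in fields:
        root.remove(field)
        root.append(field)
    return ET.tostring(root, encoding="unicode")


caption = """<tags>
<characters>kubo nagisa</characters>
<general>long hair, purple hair, purple eyes</general>
</tags>"""

# Demo: a different seed (or epoch) gives a different variant of the caption.
print(augment_xml_caption(caption, random.Random(0)))
```

Because the augmentation runs again every epoch, the model sees a slightly different caption for the same image each time, so it is less likely to depend on a fixed tag order or on every tag being present.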

III. Model Components

Text Encoder: Pretrained Gemma-2-2B

VAE: Flux.1 dev's VAE

Image Backbone: Fine-tuned version of NetaLumina's backbone

IV. File Information

This all-in-one file includes the weights for the VAE, text encoder, and image backbone. It is fully compatible with ComfyUI and other systems that support custom pipelines.

If you only want to download the image backbone, feel free to visit my Hugging Face page. It includes the separated files along with the .pth files in case you want to use them for fine-tuning.

V. Suggested Settings

For more details and to achieve better results, please refer to the Neta Lumina Prompt Book.

VI. Notes & Feedback

This is an early experimental fine-tuned release, and I’m actively working on improving it in future versions.

Your feedback, suggestions, and creative prompt ideas are always welcome — every contribution helps make this model even better!

VII. How to Run the Model on Another Platform

You can use it through the tensor.art platform. Here is the model link: https://tensor.art/models/898410886899707191

However, to run the model in an optimized way, I recommend using Comfyflow from tensor.art (because its default runner lacks configuration, which makes the model run suboptimally). Here is an example flow you can use on the platform: https://huggingface.co/duongve/NetaYume-Lumina-Image-2.0/blob/main/Lumina_image_v2_tensorart_workflow.json

VIII. Acknowledgments

Big thanks to narugo1992 for the dataset contributions.

Credit to Alpha-VLLM and Neta.art Lab for the fantastic base model architecture.

If you'd like to support my work, you can do so through Ko-fi!