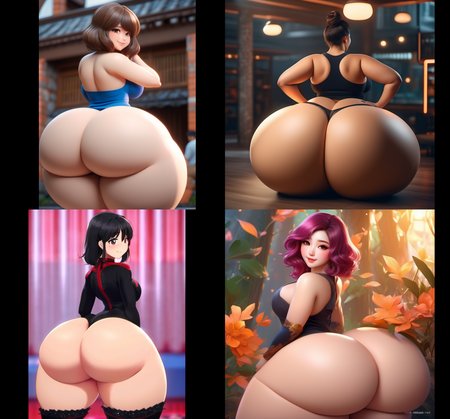

This is a test LoRA of my bottomheavy dataset, but trained on SDXL. I wanted to see how SDXL would handle the same data/config as my last released model.

The results came out better than I expected and it was pretty quick to train compared to SD1, so I'm uploading it just for fun. Still, don't expect too much from this LoRA. This was a low-effort training attempt, and I have no idea how to prompt for SDXL yet, so keep that in mind.

See Training Data for the tag list.

Training Findings:

It seems that SDXL does learn the concept faster than SD1. My last bottomheavy model trained way longer with a similar config: 16 epochs vs 160 epochs (though this model is probably undertrained).

A lower network_dim seems to work fine. I will probably try even lower than 16 later.

Training is about 2-3x slower per iteration on SDXL than it was on SD1, but the speed at which SDXL learns the concept makes it somewhat competitive.
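
To put rough numbers on that trade-off, here is a back-of-the-envelope sketch using the 16 vs 160 epoch figures and the 2-3x per-iteration slowdown quoted above. It assumes both runs had about the same number of iterations per epoch, which is not something I measured, and since this model is probably undertrained the real gap is likely smaller.

```python
# Rough relative-cost estimate from the figures quoted above.
# Assumption: both runs use the same number of iterations per epoch.
sd1_epochs = 160
sdxl_epochs = 16
slowdown_low, slowdown_high = 2.0, 3.0  # SDXL time per iteration relative to SD1


def relative_cost(slowdown: float) -> float:
    """SDXL total training time as a fraction of SD1 total training time."""
    return (sdxl_epochs * slowdown) / sd1_epochs


best = relative_cost(slowdown_low)
worst = relative_cost(slowdown_high)
print(f"SDXL run takes roughly {best:.0%} to {worst:.0%} of the SD1 run's time")
```

Under those assumptions the SDXL run comes out at a fraction of the SD1 wall-clock time even with the slower iterations.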

I didn't necessarily need text encoder training to get decent results. On SD1 I would have to train for much, much longer if I did not use text encoder training. Here it learned the concept pretty well without it.

Description

This is a test of SDXL's ability to learn far-fetched concepts. It is not up to par with most of my other models, but I still wanted to show off what SDXL is capable of learning.

Training Details:

~400 images

14 epochs

batch 2

gradient accumulation 4

learning rate 2e-4

No text encoder

base model SDXL

clip skip 1

random flip

shuffle captions

tag drop chance 0.1

network dropout 0.4

bucketing at 1024

dim 16

alpha 8

conv_dim 8

conv_alpha 4

cosine with restarts

scale weight norm 1
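
For a sense of what these settings add up to, here is a minimal sketch that derives the effective batch size, an approximate optimizer step count, and the LoRA scaling factors from the list above. The image count is rounded to 400, the repeat count of 1 is an assumption (it is not listed), and the alpha / dim scaling is the common LoRA convention rather than something confirmed for this specific trainer.

```python
# Values taken from the Training Details list; num_images is approximate (~400).
num_images = 400
epochs = 14
batch_size = 2
grad_accum = 4
repeats = 1  # assumption: the number of dataset repeats is not stated

# Gradients from grad_accum batches are summed before each optimizer update.
effective_batch = batch_size * grad_accum

# Approximation: bucketing and partial final batches are ignored.
optimizer_steps_per_epoch = (num_images * repeats) // effective_batch
total_optimizer_steps = optimizer_steps_per_epoch * epochs

# Common LoRA convention: the learned update is multiplied by alpha / dim.
linear_scale = 8 / 16  # alpha 8, dim 16
conv_scale = 4 / 8     # conv_alpha 4, conv_dim 8

print(f"effective batch size: {effective_batch}")
print(f"optimizer steps: ~{total_optimizer_steps}")
print(f"LoRA scale (linear / conv): {linear_scale} / {conv_scale}")
```

With alpha set to half of dim for both the linear and conv layers, the update is scaled by the same factor in each, so the learning rate acts on them consistently.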