This is where I experiment with SD1.5 LoRAs.

The main goal is overall enhancement rather than focusing on a single concept.

■Dora_nsfw_remember is intended to complement my test merge model, but since it was trained on NovelAI v1, it should also work with NovelAI v1 derivative models.

My test model: https://civarchive.com/models/1246353/sd15modellab

■nai_v2_highres is a high-resolution stabilizing DoRA for novelai_v2.

Please download the official checkpoint from the URL below.

https://huggingface.co/NovelAI/nai-anime-v2

I’ve also made a safetensors version, just in case.

https://civarchive.com/models/1772131

■nai_v2_semi-real is a semi-realistic style DoRA for novelai_v2.

■I use OneTrainer for training and ComfyUI for inference.

■I’ll share as much of my training settings and inference workflows as I can.

■If the prompt is short, the background may become simple or the style may lean toward realism.

The uploaded tipo_workflow can generate longer prompts automatically, so please give it a try!

■Oversaturation sometimes occurs due to overfitting or compatibility issues with the base model. Adjusting the DoRA strength or prompt weights, or reviewing the CFG, can help.

■There’s also a high-resolution inference workflow using kohya_deep_shrink.

It enables larger, more expansive compositions and removes the need for high_res_fix.

1152px offers a good balance of quality, stability, and speed, while 1536px is more dynamic and detailed.
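As a rough illustration of why deep shrink helps at these sizes: SD1.5 works best around 512px, and deep shrink temporarily downscales the U-Net's internal features during the early part of sampling so the composition is laid out closer to that scale. The sketch below uses a simple heuristic that is my assumption, not a setting taken from the workflow: pick a downscale factor that maps the target image's area back to a 512px square. In ComfyUI the built-in node is listed as "PatchModelAddDownscale (Kohya Deep Shrink)", and its `downscale_factor` input is where such a value would go; please compare against the uploaded workflow before relying on it.

```python
import math

TRAIN_RES = 512  # SD1.5's native resolution (assumption used by this heuristic)


def deep_shrink_factor(width: int, height: int, train_res: int = TRAIN_RES) -> float:
    """Heuristic downscale factor: the target's effective side length
    (geometric mean of width and height) divided by the training resolution."""
    return math.sqrt(width * height) / train_res


for w, h in [(1152, 1152), (1536, 1536), (768, 1152)]:
    print(f"{w}x{h}: downscale_factor ~ {deep_shrink_factor(w, h):.2f}")
```

With this heuristic, 1152px maps to a factor of 2.25 and 1536px to 3.0; treat these as starting points to tune, not recommended values.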

By the way, this DoRA was created to give SD1.5 a level of concept understanding comparable to my PixArt-Sigma anime fine-tune.

SD1.5 with this DoRA applied is likely the most compatible refiner for that fine-tune, which makes it well suited to i2i tasks.

My PixArt-Sigma fine-tune:

https://civarchive.com/models/505948/pixart-sigma-1024px512px-animetune

■Please feel free to ask if you have any questions!

Questions in Japanese are also welcome, so don’t hesitate to ask!

Description

DoRA (49.11 MB): v05 Dora

Training Data (362.07 MB): ComfyUI_workflow+onetrainer_data+old_epoch_Dora_backup_data

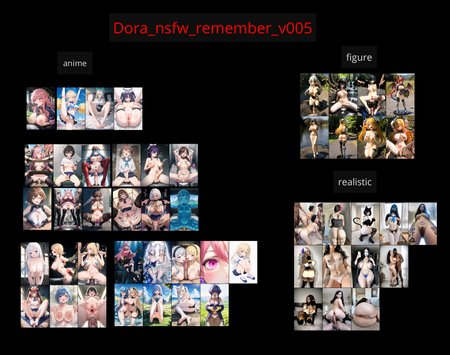

●Trained this DoRA on a dataset of 400,000 images.

●The goal is a DoRA that improves overall tag recognition and image quality.

This version continues training from v04 for 51 more epochs (605,000 additional steps).

●The concepts have been further reinforced.

Some tags may show improved reproducibility of characters and concepts.

However, if you need faithful reproduction, please combine it with other LoRAs.

●Similar to v04, it tends to be slightly overfitted, so lowering the DoRA weight to around 0.5 may result in better balance with the base model's style and improved texture.
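To picture what lowering the DoRA weight does, here is a minimal pure-Python sketch on a tiny matrix. The merge follows the general DoRA formulation (add the low-rank update, normalize each output row, rescale it by a learned magnitude). The strength handling is an assumption: it linearly interpolates between the base weight and the fully merged weight, which is one plausible way a UI applies a weight below 1, but tools may differ. `dora_merge` and `apply_strength` are hypothetical helper names, and the numbers are toy values.

```python
import math


def dora_merge(base, delta, magnitude):
    """Merge a DoRA update into `base` (rows = output channels).

    For each row: v = base_row + delta_row, rescale v to unit L2 norm,
    then multiply by that row's learned magnitude."""
    merged = []
    for w_row, d_row, mag in zip(base, delta, magnitude):
        v = [w + d for w, d in zip(w_row, d_row)]
        norm = math.sqrt(sum(x * x for x in v))
        merged.append([mag * x / norm for x in v])
    return merged


def apply_strength(base, merged, strength):
    """Assumed strength handling: move `strength` of the way from base to merged."""
    return [
        [w + strength * (m - w) for w, m in zip(w_row, m_row)]
        for w_row, m_row in zip(base, merged)
    ]


base = [[1.0, 0.0], [0.0, 2.0]]
delta = [[0.0, 1.0], [0.0, 0.0]]  # toy stand-in for the low-rank update B @ A
magnitude = [2.0, 2.0]            # toy learned magnitudes, one per output row

merged = dora_merge(base, delta, magnitude)
half = apply_strength(base, merged, 0.5)
print(merged)
print(half)
```

Under this assumption, a weight of 0.5 lands exactly halfway between the base model's weights and the full DoRA result, which is one way to think about getting a better balance with the base model's style.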

●A long negative prompt, high tag weights, or a high CFG value can also cause artifacts, so it’s worth reviewing those settings as well. With realistic models in particular, these factors can significantly increase the likelihood of artifacts.
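For reference, the standard classifier-free guidance combination is shown below. Here $w$ is the CFG scale, and when a negative prompt is used, its prediction takes the place of the unconditional one. A higher $w$, or heavy negative prompts and tag weights that push the two predictions further apart, enlarge the extrapolated term. That is one plausible explanation for why these settings make artifacts more likely, not a measured result.

```latex
\hat{\epsilon}_\theta(x_t) = \epsilon_\theta(x_t, c_{\text{neg}})
  + w \,\bigl(\epsilon_\theta(x_t, c_{\text{pos}}) - \epsilon_\theta(x_t, c_{\text{neg}})\bigr)
```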

●Earlier epochs (v02–v04) may be more flexible and easier to use in some cases, so they’re worth trying to find the right balance.

●Generation at 512px is the most stable, but generating at higher resolutions such as 768x1152px with kohya_deep_shrink can produce more compelling images, so I recommend giving it a try. It may introduce slight artifacts, but it’s well worth experimenting with.

The text below is carried over unchanged from previous versions.

____________________________________________________________________

●Increasing the DoRA weight or tag weights above 1 is likely to cause instability.

However, tools like A1111 or Forge may normalize tag weights, so increasing them might not cause problems there. I believe ComfyUI also has custom prompt nodes that can perform similar normalization.

If you notice excessive style influence or artifacts even with the weight set to 1, try lowering the DoRA weight.
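As a rough sketch of what "normalized" can mean here: AUTOMATIC1111's WebUI multiplies each token's embedding by its weight and then rescales the whole embedding so its overall mean matches the unweighted mean. The pure-Python version below is a simplified illustration of that idea on toy vectors, not the WebUI's actual code. `weight_and_renormalize` is a hypothetical name, and Forge or ComfyUI custom nodes may use different schemes.

```python
def mean(values):
    return sum(values) / len(values)


def weight_and_renormalize(embeddings, weights):
    """Scale each token embedding by its weight, then rescale everything so the
    overall mean matches the unweighted mean (A1111-style, simplified)."""
    original_mean = mean([x for vec in embeddings for x in vec])
    weighted = [[x * w for x in vec] for vec, w in zip(embeddings, weights)]
    new_mean = mean([x for vec in weighted for x in vec])
    scale = original_mean / new_mean
    return [[x * scale for x in vec] for vec in weighted]


tokens = [[1.0, 1.0], [1.0, 1.0]]  # two toy token embeddings
out = weight_and_renormalize(tokens, [1.0, 3.0])
print(out)  # [[0.5, 0.5], [1.5, 1.5]]
```

The weighted token still ends up three times stronger than the other one, but the overall magnitude is pulled back. That would explain why raising weights tends to be gentler in tools that normalize.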

The sample images contain many imperfections; that’s a compromise I made because there are so many of them.

●Dataset contents

Concept enhancement: 240,000 images

Aesthetic enhancement: 130,000 images

Figure + realistic: 30,000 images
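The three subsets add up to the 400,000-image total mentioned for v05. Here is a quick check of the total and each subset's share:

```python
dataset = {
    "Concept enhancement": 240_000,
    "Aesthetic enhancement": 130_000,
    "Figure + realistic": 30_000,
}

total = sum(dataset.values())
print(f"total: {total:,} images")  # total: 400,000 images
for name, count in dataset.items():
    print(f"{name}: {count / total:.1%}")
```

Concept enhancement makes up 60% of the data, aesthetic enhancement 32.5%, and figure + realistic 7.5%.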

●This DoRA has no trigger tags. The goal is general concept enhancement without restricting the original model.

●I aimed to train as many concepts as possible, as evenly as possible. The effect of any single tag is weak on its own, so you may need to combine several related tags.

For example, if the character has long green hair, include both "long hair" and "green hair". The same applies to NSFW tags.

● If you want to reinforce specific concepts, adding a single-concept LoRA is recommended.

●Outputs may sometimes show "censored, mosaic censoring, bar censor, text", because these appear in my dataset. The dataset includes uncensored images too, but many images are censored. You can enjoy them as part of the look, as a bit of spice, or add those tags to the negative prompt. Adding "uncensored" to the prompt may also help, though it won’t always resolve the issue.

●Training was done on NovelAI_v1, so it should work with its derivatives. However, some derivative models suffer from concept forgetting because they were overfitted on specific concepts or styles, so tags that work in NovelAI may be weaker in those models.

●Be careful with negative prompts.

Using terms like "(worst quality, low quality:1.4)" makes it easy to get high-quality images, but it may limit diversity.

If special tags like "slime girl" don’t seem to have an effect, the strength of these negative prompts might be too high.
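The `(tags:1.4)` syntax attaches a weight of 1.4 to everything inside the parentheses. Below is a minimal sketch of how such a prompt breaks down into weighted chunks. It handles only the explicit `(text:weight)` form and ignores nesting, bare `(tag)` emphasis, and `[tag]` de-emphasis, which real UIs also support. `parse_weights` is a hypothetical helper, not any tool's API.

```python
import re

# Matches an explicit "(text:weight)" group, e.g. "(worst quality, low quality:1.4)".
WEIGHTED = re.compile(r"\(([^()]+?):\s*([0-9]*\.?[0-9]+)\)")


def split_plain(text):
    """Split unweighted text on commas; each tag gets the default weight 1.0."""
    return [(tag.strip(), 1.0) for tag in text.split(",") if tag.strip()]


def parse_weights(prompt):
    """Return (text, weight) chunks for a prompt using only the (text:weight) form."""
    chunks, pos = [], 0
    for match in WEIGHTED.finditer(prompt):
        chunks.extend(split_plain(prompt[pos:match.start()]))
        chunks.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    chunks.extend(split_plain(prompt[pos:]))
    return chunks


negative = "(worst quality, low quality:1.4), text"
print(parse_weights(negative))  # [('worst quality, low quality', 1.4), ('text', 1.0)]
```

In this example, both quality tags are weighted at 1.4. That is the kind of strength that, as noted above, can drown out special tags like "slime girl".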

●With short prompts, the background may become more plain, and the style may lean toward realism.

In that case, try adding background tags or including as many details as possible, like clothing and hairstyle, to better define the image you want to generate.

●Only general tags and character tags have been trained.

Titles of works haven’t been specifically trained, but some character tags include the title, so you might get lucky and see some reinforcement.

However, since characters and works were not the focus of training, they may not be well learned, so please don’t expect too much. For characters, adding a single-concept LoRA will likely be more effective.

●This DoRA is designed for 512px, so using it at higher resolutions may sometimes cause distortions. Turning off the DoRA during high-res fix or i2i upscaling may give clearer images with fewer distortions.

●I'll also share my OneTrainer settings for reference.

Training is U-Net only, with Clip Skip 2 (the corresponding OneTrainer setting is 1). I’ll also share my ComfyUI workflow just in case.
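Clip skip is counted differently across tools, which is why the same setting appears as both 2 and 1 above. The sketch below maps between conventions. It assumes that A1111/Forge "Clip skip N" corresponds to ComfyUI's CLIP Set Last Layer node with `stop_at_clip_layer = -N`. Based on the note above, it also assumes OneTrainer's value is N - 1 (only the skipped final layers are counted). Treat the OneTrainer mapping as an assumption drawn from this page. `clip_skip_equivalents` is a hypothetical helper.

```python
def clip_skip_equivalents(a1111_clip_skip: int) -> dict:
    """Express one clip-skip setting in three tools' conventions.

    a1111_clip_skip: A1111/Forge "Clip skip" value (1 = use the last CLIP layer).
    """
    if a1111_clip_skip < 1:
        raise ValueError("A1111 clip skip starts at 1")
    return {
        "A1111 / Forge clip skip": a1111_clip_skip,
        # ComfyUI "CLIP Set Last Layer" counts from the end: -1 = last layer.
        "ComfyUI stop_at_clip_layer": -a1111_clip_skip,
        # Assumption: OneTrainer counts only the layers skipped, so 2 -> 1.
        "OneTrainer clip skip": a1111_clip_skip - 1,
    }


print(clip_skip_equivalents(2))
```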