March 29th, 2024 update: A more experimental personal merge based on Fluffusion R3 has been posted. It differs from previous versions in this model series, so it does not follow their naming scheme. Because it is a v-prediction model, remember to use the yaml config or the equivalent sampling nodes. Fluffusion is trained with clip skip 2, so make sure you set that in your UI of choice.

Below is information that is outdated. That is all.

Results oversaturated, smooth, lacking detail? No result at all? Please read the following!

This is a terminal-snr-v-prediction model. It requires both a configuration file placed alongside the model and the CFG Rescale webui extension! See further down for more information and the ComfyUI equivalent.
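A quick way to catch a missing configuration file is to scan the model folder. The sketch below is only an illustration: it assumes the config shares the checkpoint's base name (for example `model.safetensors` next to `model.yaml`), and the function name and the folder path in the usage comment are hypothetical.

```python
from pathlib import Path

# Assumption: a checkpoint's config is a .yaml file with the same base name,
# stored in the same folder as the checkpoint.
CHECKPOINT_SUFFIXES = {".safetensors", ".ckpt"}


def missing_configs(model_dir):
    """Return the names of checkpoints in model_dir that have no same-named .yaml."""
    missing = []
    for path in sorted(Path(model_dir).iterdir()):
        is_checkpoint = path.suffix.lower() in CHECKPOINT_SUFFIXES
        if is_checkpoint and not path.with_suffix(".yaml").exists():
            missing.append(path.name)
    return missing


# Usage (hypothetical folder path):
# print(missing_configs("models/Stable-diffusion"))
```

Any checkpoint it lists would presumably load without v-prediction mode, which matches the oversaturated or empty results described above.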

HOW TO RUN THIS MODEL

- This is a terminal-snr-v-prediction model and you will need an accompanying configuration file to load the model in v-prediction mode. You can download it in the files drop-down and place it in the same folder as the model.

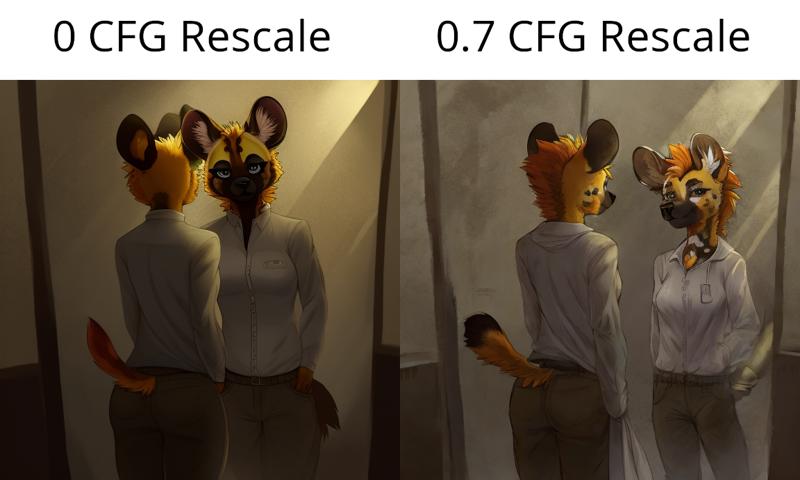

- You will also need https://github.com/Seshelle/CFG_Rescale_webui. This extension can be installed from the Extensions tab by copying this repository link into the Install from URL section. A CFG Rescale value of 0.7 is recommended by the creator of the extension themself. The CFG Rescale slider will be below your generation parameters and above the scripts section when installed. If you do not do this and run inference without CFG Rescale, these will be the types of results you can expect per this research paper.

- If you are on ComfyUI, you will need the sampler_rescalecfg.py node from https://github.com/comfyanonymous/ComfyUI_experiments.
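For intuition about what CFG Rescale does, here is a minimal sketch of the rescaling step as it is commonly described: the guided prediction is scaled so its standard deviation matches that of the conditional prediction, then blended with the unscaled result. This is not the extension's code. It works on flat Python lists rather than tensors, and the function name and the default guidance scale of 7.0 are assumptions for illustration; only the 0.7 rescale value comes from the text above.

```python
from statistics import pstdev


def rescale_cfg(cond, uncond, guidance_scale=7.0, rescale=0.7):
    """Apply classifier-free guidance, then rescale toward the conditional std."""
    # Plain classifier-free guidance: push away from the unconditional prediction.
    cfg = [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]

    std_cond = pstdev(cond)
    std_cfg = pstdev(cfg)
    if std_cfg == 0:
        return cfg  # nothing to rescale

    # Shrink the guided prediction so its spread matches the conditional one.
    factor = std_cond / std_cfg

    # Blend the rescaled and plain results; rescale=0 disables the correction.
    return [rescale * (x * factor) + (1 - rescale) * x for x in cfg]


# Toy values standing in for model predictions (hypothetical numbers).
cond = [1.0, -1.0, 0.5, -0.5]
uncond = [0.5, -0.5, 0.2, -0.2]
print(rescale_cfg(cond, uncond))
```

With `rescale=0` the function returns ordinary CFG output, which is the setting that tends to produce the oversaturated results mentioned earlier.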

HOW TO PROMPT THIS MODEL

- Natural language has a strong impact on this model as of V7. It won't understand everything, but it can get you places. When you can't squeeze any more out of it, use e621 tags to help refine your prompt. (Or just use tags; either way will work.)

- Start your prompt with a short natural language prompt describing what you want, then pad it with e621 tags to refine specific concepts. As this is a v-prediction model, prompt interpretation can be a bit more literal.

- Many flavor words and artists from SD 1.5 work again.

- PolyFur is trained on MiniGPT-4 captions, so try being really flowery with your prompts, and even use full sentences. (Check the generation info on the preview images to see how.)

- If an established character isn't coming out accurately, try increasing the strength of their token and adding a few implied tags that describe their appearance. Keep in mind that characters that aren't very popular or don't have many images in FluffyRock's dataset typically won't fare that well without a LORA.

- Avoid weighting camera angle keywords too strongly, especially close-up.

- Resolutions between 576 and 1088 should work reasonably well as that is the range of FluffyRock.
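The prompting advice above can be sketched as a small helper that puts the natural language sentence first, then an optionally weighted character token, then the refining tags. It assumes your UI accepts the `(token:weight)` emphasis syntax; the function name, the 1.2 weight, and the example prompt are hypothetical.

```python
def build_prompt(sentence, tags=(), character=None, character_weight=1.2):
    """Join a natural language sentence, an optional weighted character, and tags."""
    parts = [sentence.strip()]
    if character:
        # Assumption: the UI understands (token:weight) emphasis syntax.
        parts.append(f"({character}:{character_weight})")
    parts.extend(tag.strip() for tag in tags)
    # Drop empty pieces so stray commas do not end up in the prompt.
    return ", ".join(part for part in parts if part)


print(build_prompt(
    "a red fox sitting in a snowy forest at sunset",
    tags=["fox", "snow", "detailed background"],
))
```

Raising `character_weight` is the "increase the strength of their token" step; camera angle tags such as close-up are better left unweighted, per the note above.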

Model Info and Creation Process

V8.1 is now available. It is the last major version for a while. Currently, the quality tag LORA from Feffy is not baked in, and I recommend using it as a separate LORA. As of V4-V6, @Feffy's Quality Tag LORA was baked into the FluffyRock part of this model. Add masterpiece, best quality to your positive prompt and worst quality, low quality, normal quality to your negative prompt.

This model is a "Train Difference" mix (see https://github.com/hako-mikan/sd-webui-supermerger/blob/main/calcmode_en.md#traindifference) of FluffyRock and PolyFur onto a diverse and versatile base model, done to expand the text encoder's range of prompting and its understanding of concepts outside of e621 tags. This process differs from traditional merging and block merging and shouldn't be confused with the "Add Difference" mode we already had. The current base model is a mix of 6 non-furry models at varying ratios, most of which are themselves merges that cover a lot of ground in terms of prompting capability. As of V6, the process has gotten more intricate, with CLIP tweaks and block merging.

Credits go to Lodestone Rock and company for training such an expansive model. https://civarchive.com/models/92450?modelVersionId=124661 https://huggingface.co/lodestones/furryrock-model-safetensors/tree/main

And pokemonlover69 over at https://civarchive.com/models/124655?modelVersionId=136127 for humoring my insanity on this process.

And Feffy for their Fluffyrock Quality Tags LORA. https://civarchive.com/models/127533

Description

Experimental personal merge based on Fluffusion R3. Use the yaml and clip skip 2.

Files

easyfluff_5000DefaultvaeFFR3CS2.yaml

Mirrors

subfurryanalog_v22.yaml

beanv6flufusv2_v10.yaml

9thTail_juicyV03.yaml

9thTail_mainV01.yaml

steinmix_alpha5.yaml

steinmix_alpha6.yaml

subfurryanalog_v20.yaml

furationBETA_rebootedVPDModelbaseF.yaml

yiffymix_v44.yaml

SD15ColorsplashV_v01.yaml

yifurr_cid4.yaml

yifurr_2.yaml

SD15ColorsplashV_v012.yaml

9thTail_mainV03.yaml

fluffyrock_e184VpredE157.yaml

indigoFurryMix_se02Vpred.yaml

steinmix_alpha3.yaml

9thTail_mainV02.yaml

fluffyrockanimedilut_v10.yaml

furationBETA_rebootedREFINEDVPREDF.yaml

yifurr_c3.yaml

subfurryanalog_v10.yaml

steinmix_charlie2.yaml

fluffyrockE6LAION_e98E6laionE47.yaml

easyfluff_5000DefaultvaeFFR3CS2.yaml

9thTail_altV03.yaml

indigoFurryMix_se01Vpred.yaml

yifurr_newmoone2.yaml

9thTail_softV01.yaml

fluffyrock_e257VpredE11.yaml

pegasusmix_VPredV2.yaml

pegasusmix_VPredV1.yaml

steinmix_alpha6bL.yaml

fluffyrock_e233VpredE206.yaml

yiffyfluffybutts_V061.yaml

yiffymix_v43.yaml

yiffymix_v42.yaml

yiffymix_v41.yaml

yiffymix_v40.yaml

yifurr_sunrise.yaml

yifurr_sun.yaml

yifurr_cid.yaml

furationBETA_rebootedVPREDPartF.yaml

fluffyrockUnleashed_baseV10.yaml

SD15ColorsplashV_v013.yaml

SD15ColorsplashV_v011.yaml

9thTail_softV03.yaml