FLAN-T5-XXL (Text-Encoder Only)

The FP8 and GGUF formats are distributed as compressed ZIP files. Unzip them with any decompression software before use, or download them from the Hugging Face page.
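If you would rather script the extraction, Python's standard-library `zipfile` module can unpack the archive. This is a minimal sketch. `extract_archive` is a hypothetical helper name, and the paths in the usage note are placeholders rather than the actual file names in this repository.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_archive(archive: Path, dest: Path) -> List[Path]:
    """Unpack a ZIP archive into dest and return the paths of the extracted files."""
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        # Skip directory entries so only real files are reported.
        names = [n for n in zf.namelist() if not n.endswith("/")]
        zf.extractall(dest)
    return [dest / n for n in names]
```

For example, `extract_archive(Path("flan_t5_xxl_fp8.zip"), Path("models/text_encoder"))` would unpack a (hypothetically named) FP8 archive straight into a text encoder folder.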

FLAN-T5-XXL is a fine-tuned version of T5-XXL v1.1, designed to improve accuracy and performance.

The original FLAN-T5-XXL model is available on Google's Hugging Face page.

When used with Flux.1, SD3.5, and HiDream, replacing T5-XXL v1.1 with FLAN-T5-XXL improves prompt comprehension and image quality.

This model has been streamlined by extracting only the text encoder, the part that image generation workflows actually use.

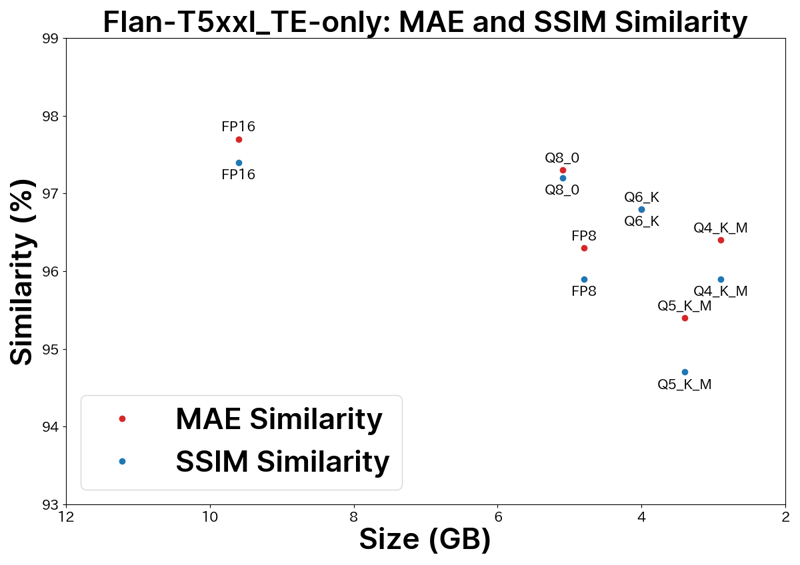

Model Variants

+--------+--------+----------+-------------+
| Format | Size   | Accuracy | Recommended |
+--------+--------+----------+-------------+
| FP32   | 19 GB  | 100.0 %  | 🌟          |
| FP16   | 9.6 GB | 98.0 %   | ✅          |
| FP8    | 4.8 GB | 95.3 %   | 🔺          |
| Q8_0   | 5.1 GB | 97.6 %   | ✅          |
| Q6_K   | 4.0 GB | 97.3 %   | 🔺          |
| Q5_K_M | 3.4 GB | 94.8 %   |             |
| Q4_K_M | 2.9 GB | 96.4 %   |             |
+--------+--------+----------+-------------+
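To make the size/accuracy trade-off concrete, the table can be turned into a small selection helper. This is an illustrative sketch, not part of any loader. `pick_variant` is hypothetical, it treats file size as a rough proxy for the memory the weights occupy, and it simply returns the most accurate variant that fits the given budget.

```python
from typing import Dict, Optional, Tuple

# (size in GB, relative accuracy in %) copied from the Model Variants table.
VARIANTS: Dict[str, Tuple[float, float]] = {
    "FP32": (19.0, 100.0),
    "FP16": (9.6, 98.0),
    "FP8": (4.8, 95.3),
    "Q8_0": (5.1, 97.6),
    "Q6_K": (4.0, 97.3),
    "Q5_K_M": (3.4, 94.8),
    "Q4_K_M": (2.9, 96.4),
}


def pick_variant(budget_gb: float) -> Optional[str]:
    """Return the most accurate variant whose file size fits in budget_gb, or None."""
    fitting = [(acc, name) for name, (size, acc) in VARIANTS.items() if size <= budget_gb]
    if not fitting:
        return None
    # Tuples compare by accuracy first, so max() picks the highest-accuracy variant.
    return max(fitting)[1]
```

With a 6 GB budget this returns `"Q8_0"`. Because the rule ranks purely by the Accuracy column, a 3.5 GB budget yields `"Q4_K_M"` rather than the larger Q5_K_M.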

Usage Instructions

Place the downloaded model files in one of the following directories:

- models/text_encoder
- models/clip
- Models/CLIP

Select this model in place of the standard T5-XXL v1.1 model in your workflow.
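Copying the file into place can also be scripted. This is a hypothetical helper, `install_text_encoder`, written as a sketch: it checks the folders listed above (relative to your UI's install directory, which you pass in) and copies the model into the first one that already exists, rather than guessing which UI you use.

```python
import shutil
from pathlib import Path
from typing import Optional

# Folders listed in the usage instructions, relative to the UI's install directory.
CANDIDATE_DIRS = ["models/text_encoder", "models/clip", "Models/CLIP"]


def install_text_encoder(model_file: Path, ui_root: Path) -> Optional[Path]:
    """Copy model_file into the first candidate folder that exists under ui_root."""
    for rel in CANDIDATE_DIRS:
        target_dir = ui_root / rel
        if target_dir.is_dir():
            # copy2 also preserves file timestamps; it returns the destination path.
            return Path(shutil.copy2(model_file, target_dir / model_file.name))
    return None  # none of the expected folders exist under ui_root
```

A `None` result means none of the expected folders were found, which usually indicates the wrong install directory was passed.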

FP32 format

The FP32 format provides the highest image quality.

Stable Diffusion WebUI Forge

To use the text encoder in FP32 format, launch Stable Diffusion WebUI Forge with the --clip-in-fp32 argument.

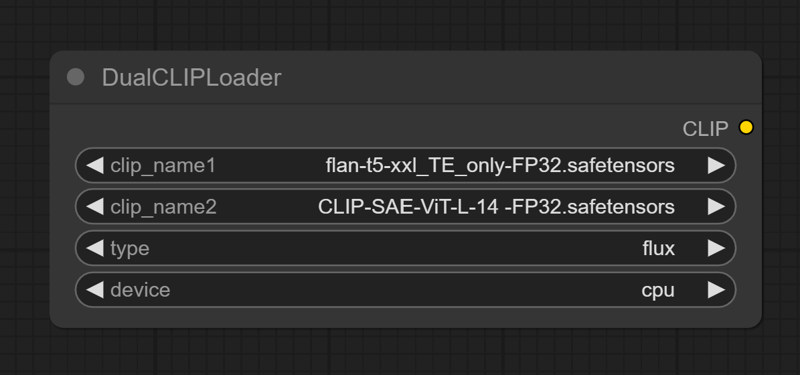

ComfyUI

To use the text encoder in FP32 format for optimal results, launch ComfyUI with the --fp32-text-enc argument.
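Both launch flags can be wrapped in a small Python launcher. This is a sketch under stated assumptions: the flags `--clip-in-fp32` and `--fp32-text-enc` come from this README, while the entry-point scripts (`launch.py` for a Forge source checkout, `main.py` for ComfyUI) and the helper names `fp32_command` and `launch` are assumptions. Installations started through wrapper scripts (for example Forge's `webui-user.bat`, where the flag would go in `COMMANDLINE_ARGS`) may need the flag added there instead.

```python
import subprocess
import sys
from pathlib import Path
from typing import Dict, List, Tuple

# UI name -> (entry-point script, FP32 text-encoder flag).
# Flags are from this README; script names assume a standard source checkout.
FP32_LAUNCH: Dict[str, Tuple[str, str]] = {
    "forge": ("launch.py", "--clip-in-fp32"),
    "comfyui": ("main.py", "--fp32-text-enc"),
}


def fp32_command(ui: str, install_dir: Path, *extra_args: str) -> List[str]:
    """Build the command line that starts `ui` with the text encoder in FP32."""
    script, flag = FP32_LAUNCH[ui]
    return [sys.executable, str(install_dir / script), flag, *extra_args]


def launch(ui: str, install_dir: Path) -> None:
    # Resolve first so the script path stays valid after changing the working directory.
    install_dir = install_dir.resolve()
    # Run from the install directory so relative paths such as models/ resolve correctly.
    subprocess.run(fp32_command(ui, install_dir), cwd=install_dir, check=True)
```

For example, `launch("comfyui", Path("ComfyUI"))` would start ComfyUI in the foreground with FP32 text encoding enabled.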

GGUF format in ComfyUI

As of April 13, 2025, ComfyUI's default DualCLIPLoader node includes a device selection option that lets you choose where to load the model:

- cuda → VRAM
- cpu → System RAM

Since Flux.1's text encoder is large, setting the device to cpu and keeping the model in system RAM frees VRAM for the diffusion model and often improves performance.

Unless your system has 16 GB of RAM or less, keeping the full-precision model in system RAM is more effective than GGUF quantization. Because most users have enough RAM, the GGUF formats offer limited benefit in ComfyUI.

For running Flux.1 in ComfyUI, use the FP16 or FP32 text encoder.
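The RAM rule of thumb in this section can be summarized as a small decision function. This is a hypothetical sketch: `choose_text_encoder` and `EncoderChoice` are illustrative names, and the function does not query the machine, so you pass in your installed RAM in GB. The 16 GB threshold, the `cpu` device, and the FP16/FP32 recommendation come from the text above; picking Q8_0 as the GGUF fallback and leaving its device unspecified are assumptions.

```python
from dataclasses import dataclass
from typing import Optional

RAM_THRESHOLD_GB = 16  # at or below this, GGUF quantization can help


@dataclass(frozen=True)
class EncoderChoice:
    fmt: str               # text encoder format to load
    device: Optional[str]  # loader device option: "cpu" = system RAM, "cuda" = VRAM, None = not prescribed


def choose_text_encoder(system_ram_gb: float, prefer_fp32: bool = False) -> EncoderChoice:
    """Rule of thumb for the Flux.1 text encoder in ComfyUI (illustrative only)."""
    if system_ram_gb > RAM_THRESHOLD_GB:
        # Enough RAM: keep a full-precision encoder in system RAM, leaving VRAM to the diffusion model.
        return EncoderChoice(fmt="FP32" if prefer_fp32 else "FP16", device="cpu")
    # Limited RAM: fall back to a GGUF quant (Q8_0 is a hypothetical default here).
    return EncoderChoice(fmt="Q8_0", device=None)
```

A machine with 32 GB of RAM gets `EncoderChoice(fmt="FP16", device="cpu")`, while one with exactly 16 GB falls through to the GGUF branch.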

Comparisons

Tip: Upgrade CLIP-L Too

For even better results, consider pairing FLAN-T5-XXL with an upgraded CLIP-L text encoder:

LongCLIP-SAE-ViT-L-14 (ComfyUI only)

Combining FLAN-T5-XXL with an enhanced CLIP-L model can further boost image quality.

License

This model is based on Google's FLAN-T5-XXL and, like the original, is licensed under Apache 2.0.

Update History

August 22, 2025

Added the "Why Use FP32 Text Encoder?" section.

July 24, 2025

Re-upload of the GGUF model, reduction in model size, and correction of metadata.