There are versions of this LoRA for different base models; make sure you use the right one.

2024-08-18: Flux version here: https://civarchive.com/models/660521/wetting-self-omorashi-concept-flux

Update 2024-07-29: New versions

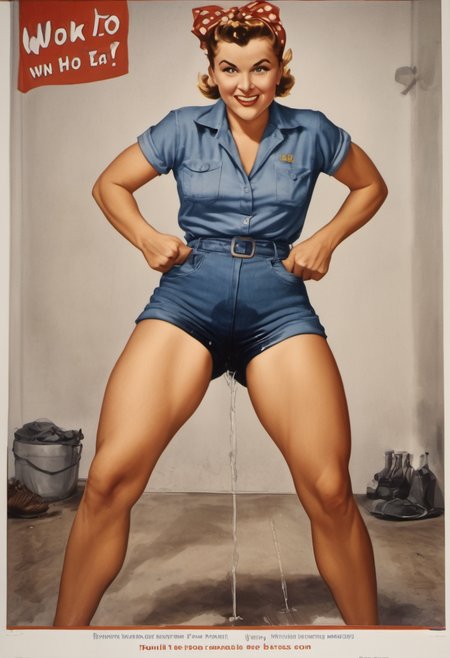

v0.1 for Base SDXL - use with base SDXL 1.0 or similar (e.g. Juggernaut). This can potentially do a lot more things than the Pony version, but is trickier to work with. I'm curious to hear any feedback.

v1.1 for PonyV6 - minor improvement in terms of aesthetics and prompt adherence due to dataset curation; in a blind test against v1.0 over 100 samples, it won 64, lost 25, tied 11.
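To put the blind test numbers in context, here is a minimal sketch of an exact two-sided sign test on the reported counts, with ties excluded. This is only an illustration: the helper `sign_test_p_value` is made up for this example, no particular statistical test was applied when the comparison was run, and the calculation assumes the 100 samples were independent.

```python
from math import comb

def sign_test_p_value(wins: int, losses: int) -> float:
    """Two-sided exact sign test p-value, with ties already excluded."""
    n = wins + losses
    k = max(wins, losses)
    # Probability of a split at least this lopsided if both versions were equally good.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)

wins, losses, ties = 64, 25, 11   # counts reported for v1.1 vs v1.0
decided = wins + losses           # ties carry no preference information
win_rate = wins / decided
p_value = sign_test_p_value(wins, losses)

print(f"win rate excluding ties: {win_rate:.3f}")
print(f"two-sided sign test p-value: {p_value:.2e}")
```

A small p-value here only says the preference is unlikely to be noise; it says nothing about how large the visual improvement is.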

Trigger words

These were used during training (the last three are undertrained):

omo wet pants

omo wet panties

omo wet shorts

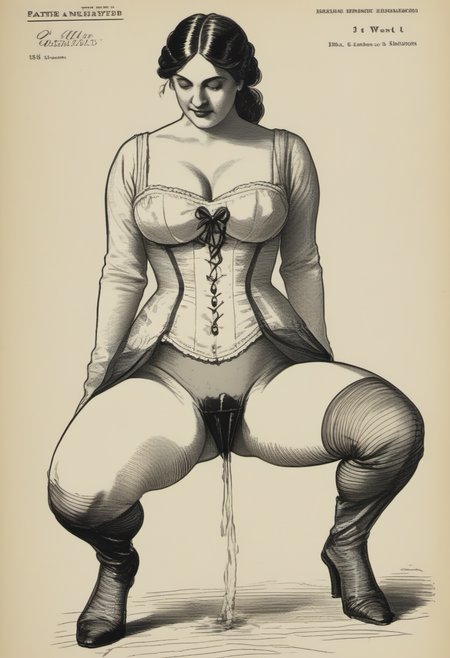

omo wet pantyhose

omo wet skirt

I did not train any new tags for poses, though Pony already knows some, such as "v arms" or "covering crotch", that appeared during training and may be useful.

The tag "peeing" may be useful if you want there to be a visible pee stream but is more or less implied with "omo wet panties".

The base SDXL version was trained with a mix of booru tag captions and natural language captions, so you do not necessarily need to use the trigger words for that version.

Recommended settings

The Pony version should work with any Pony checkpoint you like. If you want a recommendation:

Realism: Jib Mix Pony Realistic, Satpony Noscore, Real Dream v7

Artistic: Autismmix, Zavy Fantasia, Rainpony, or even just base Pony

For base SDXL, I have only tried a few checkpoints, including Juggernaut and Realistic Stock Photo.

CFG ideally between 1.5 and 3.5

Lora strength 1.0 if used on its own at recommended CFG

Training details

(These are the training details for v1.0; later versions were similar.)

Trained for 30 epochs with AdamW at a (nominal) learning rate of 3e-5 and 0.02 weight decay. Each epoch was around 1400 steps at minibatch size 1 with 48 gradient accumulation steps, using a cosine annealing LR scheduler with 2000 warmup samples.
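The numbers above can be turned into a rough schedule. The sketch below is not the OneTrainer implementation; it assumes that "steps per epoch" counts individual samples at minibatch size 1, that warmup is linear, and that the cosine decays to a floor of zero (`MIN_LR`), none of which is confirmed here.

```python
import math

EPOCHS = 30
SAMPLES_PER_EPOCH = 1400   # assumed: one "step" is one sample at minibatch size 1
GRAD_ACCUM = 48
PEAK_LR = 3e-5             # the nominal learning rate
WARMUP_SAMPLES = 2000
MIN_LR = 0.0               # assumption: no floor is stated

TOTAL_SAMPLES = EPOCHS * SAMPLES_PER_EPOCH

def lr_at(samples_seen: int) -> float:
    """Linear warmup followed by cosine annealing, indexed by samples seen."""
    if samples_seen < WARMUP_SAMPLES:
        return PEAK_LR * samples_seen / WARMUP_SAMPLES
    progress = (samples_seen - WARMUP_SAMPLES) / (TOTAL_SAMPLES - WARMUP_SAMPLES)
    progress = min(progress, 1.0)
    return MIN_LR + 0.5 * (PEAK_LR - MIN_LR) * (1 + math.cos(math.pi * progress))

# One optimizer update happens every GRAD_ACCUM samples.
optimizer_updates = TOTAL_SAMPLES // GRAD_ACCUM

print("total samples:", TOTAL_SAMPLES)
print("optimizer updates:", optimizer_updates)
print("LR halfway through the cosine phase:", lr_at(22000))
```

Under these assumptions the run sees about 42,000 samples in total, and the effective batch size of 48 means the optimizer itself only updates a few hundred times.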

Training was done on a 4090 as a full-rank finetune in bf16, using a special "Fine Tune Offset" mode in a modified OneTrainer so that weight decay pulls the weights toward the base Pony model rather than toward zero. The LoRA was extracted afterward.
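The idea of decaying toward the base model can be sketched in a few lines. This is only an illustration of the concept on plain Python lists, not the modified OneTrainer code; the function name `decay_toward_base` is hypothetical, and the gradient step is left out to keep the decay term visible.

```python
def decay_toward_base(weights, base_weights, lr, weight_decay):
    """Decoupled weight decay that shrinks the offset from the base model.

    Standard decoupled decay computes w - lr * wd * w, which pulls w toward zero.
    Here the decay acts on (w - base) instead, so it pulls w toward the base weights.
    """
    return [w - lr * weight_decay * (w - b) for w, b in zip(weights, base_weights)]

base = [0.50, -1.20, 0.00]    # toy stand-in for base model weights
tuned = [0.60, -1.00, 0.30]   # toy stand-in for finetuned weights
after = decay_toward_base(tuned, base, lr=3e-5, weight_decay=0.02)

for b, t, a in zip(base, tuned, after):
    print(f"base={b:+.2f} tuned={t:+.2f} offset before={t - b:+.6f} after={a - b:+.6f}")
```

Because only the offset is regularized, weights that the finetune never needed to change stay at their base values, which should also keep the extracted LoRA small.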

Each image had its own computed loss weight, fractional repeats value, and timestep bias based on quality, aesthetics, and relevance, rounded into concept buckets. For example, low quality photographs were biased toward high timestep numbers to avoid learning details (custom OneTrainer modification). The average effective LR was around 2e-5, and the dataset contained around 1700 unique images.

The dataset consisted of both real photographs and synthetic images I generated with earlier versions of this model. The synthetic images were only used at high timesteps for composition learning, and all hands were masked out of synthetic images. The purpose of including synthetic images was "style regularization": many different styles were included in hopes of avoiding a photographic bias in the LoRA.

Natural language captions for the base SDXL version were generated with CogVLM, strongly guided by the system prompt.
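As a rough illustration of a per-image timestep bias, the sketch below draws training timesteps from a power-law distribution. The function `sample_timestep`, its `bias` parameter, and the power-law form are all assumptions made for this example; the actual OneTrainer modification may work quite differently. It also assumes the usual 1000 training timesteps.

```python
import random

NUM_TIMESTEPS = 1000  # assumed: standard number of training timesteps

def sample_timestep(bias: float, rng: random.Random) -> int:
    """Draw a timestep; bias > 1 favors high (noisy) timesteps, bias = 1 is uniform."""
    u = rng.random() ** (1.0 / bias)
    return min(int(u * NUM_TIMESTEPS), NUM_TIMESTEPS - 1)

rng = random.Random(0)
draws = 10_000
# A good image trains on all timesteps; a low quality one mostly on noisy ones.
uniform_mean = sum(sample_timestep(1.0, rng) for _ in range(draws)) / draws
biased_mean = sum(sample_timestep(3.0, rng) for _ in range(draws)) / draws

print("mean timestep, bias 1.0:", round(uniform_mean))
print("mean timestep, bias 3.0:", round(biased_mean))
```

At high timesteps the input is mostly noise, so the model learns overall composition from those images while fine detail from low quality sources contributes little.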

Known issues

The dataset contained only female subjects. Mostly 1girl, some 2girls.

The sample images are a bit cherry-picked to avoid cases where it messes up faces. You'll probably want to do a face detailer pass.

It is undertrained, especially on pantyhose and some skirt poses, for example squatting in a pencil skirt. For shorts, it seems to confuse pee streams with the threads hanging off of cutoff jean shorts.

If the wet spot on pants is too shiny or paint-like, you can try putting "wet" in the negative, but I would recommend trying to fix this with sampler settings instead.

Puddles may be very yellow, especially at high CFG; you can try "yellow pee" in the negative.

Depending on checkpoint, there may be a bias toward older faces than the typical Pony sameface, or at least a bias toward nasolabial folds. This was mostly intentional.

Future work

I am still looking at training this LoRA for other types of models, and I am interested in trying out some types of preference optimization training strategies.