The LoRA was trained on just two single transformer blocks at rank 32, which keeps the file size small without any loss of quality.
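The file-size argument above can be sketched with a rough parameter count. This is only an illustration: the layer shapes, the hidden size of 3072, the choice of which linear layers are adapted, and the 16-bit storage are all assumptions, and `lora_param_count` is a hypothetical helper, not part of any training tool.

```python
def lora_param_count(rank, layer_shapes):
    """Count trainable LoRA parameters for a set of linear layers.

    Each adapted layer of shape (d_in, d_out) gets two low-rank factors,
    A (rank x d_in) and B (d_out x rank), adding rank * (d_in + d_out).
    """
    return sum(rank * (d_in + d_out) for d_in, d_out in layer_shapes)


# Assumed, illustrative shapes for the linear layers of one single block.
# The layers this LoRA actually targets are not stated in the description.
HIDDEN = 3072
single_block_layers = [
    (HIDDEN, 7 * HIDDEN),  # fused QKV + MLP input projection (assumed)
    (5 * HIDDEN, HIDDEN),  # fused attention + MLP output projection (assumed)
]

# Two adapted blocks at rank 32, as described above.
params = lora_param_count(rank=32, layer_shapes=single_block_layers * 2)

# 2 bytes per parameter, assuming 16-bit weights in the saved file.
size_mb = params * 2 / 1e6
print(f"{params:,} parameters, about {size_mb:.1f} MB")
```

Under these assumptions the count grows linearly with both the rank and the number of adapted blocks, which is why restricting training to two blocks keeps the file small.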

Because the LoRA is applied to only two blocks, it is less prone to bleeding effects. Many thanks to 42Lux for their support.

Details

Downloads: 161

Platform: CivitAI

Platform Status: Deleted

Created: 9/4/2024

Updated: 7/6/2025

Deleted: 5/23/2025

Trigger Words:

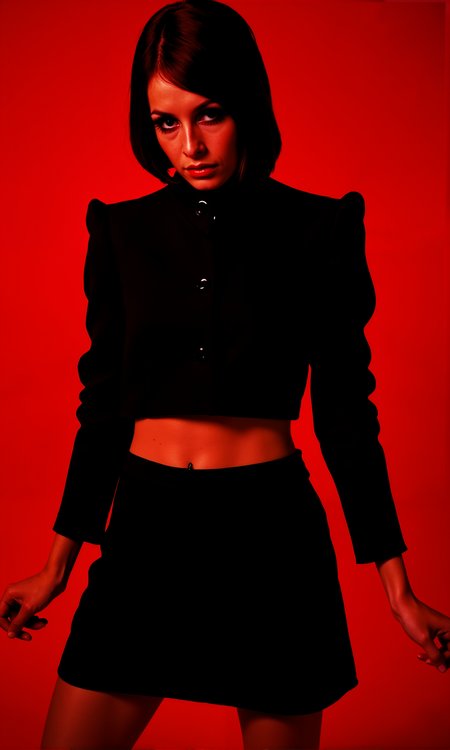

Twiggy

Files

twiggy_v2_400step_flux_lora.safetensors

Mirrors

CivitAI (2 mirrors)

Other Platforms (TensorArt, SeaArt, etc.) (1 mirror)

twiggy_v2_400step_flux_lora (1).safetensors

Mirrors

CivitAI (2 mirrors)

Other Platforms (TensorArt, SeaArt, etc.) (1 mirror)