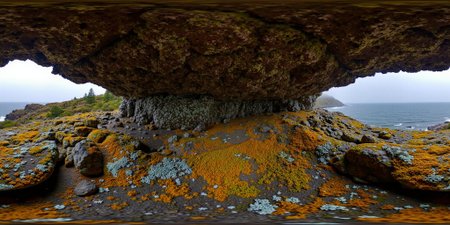

This is a LoRA for FLUX.1 Dev that aims to improve the quality of equirectangular 360-degree panoramas. These can be viewed as immersive environments in VR or used as skyboxes.

An updated example workflow with more notes is available here: https://civarchive.com/models/745010?modelVersionId=833115

Information

The trigger phrase is "equirectangular 360 degree panorama". I would avoid saying "spherical projection", since that tends to produce spherical images that aren't equirectangular. Images should always have a 2:1 aspect ratio. 1024 x 512 and 1408 x 704 work quite well and were used in the training data; 2048 x 1024 also works. I suggest a LoRA weight of 0.5 to 1.5. If images come out too flat, without the necessary spherical distortion, try increasing the weight above 1, though this can hurt small details. For Flux guidance, I recommend a value of about 2.5 for realistic scenes.
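As a small sketch of the resolution and prompt advice above, the helpers below return a 2:1 width and height and prepend the trigger phrase to a scene description. The requirement that both sides be multiples of 16 is an assumption (many Flux pipelines expect it), and the names `panorama_size` and `panorama_prompt` are hypothetical, not part of any tool.

```python
TRIGGER = "equirectangular 360 degree panorama"


def panorama_size(width: int) -> tuple[int, int]:
    """Return (width, height) for a 2:1 equirectangular image.

    Assumption: both sides should be multiples of 16, so the width
    must be a multiple of 32 for the halved height to qualify too.
    """
    if width % 32 != 0:
        raise ValueError(f"width {width} must be a multiple of 32")
    return width, width // 2


def panorama_prompt(description: str) -> str:
    """Prepend the LoRA's trigger phrase to a scene description."""
    return f"{TRIGGER}, {description}"
```

For example, `panorama_size(1408)` gives `(1408, 704)`, one of the training resolutions. The LoRA weight and Flux guidance are then set in whatever frontend you use.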

This tool lets you view spherical images in a browser: https://renderstuff.com/tools/360-panorama-web-viewer/

To make an image viewable in an interactive mode like this on websites that support it, add the equirectangular projection metadata with exiftool. On Windows, use a command like this:

path\to\exiftool.exe -XMP:ProjectionType="equirectangular" image.png
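If you want to tag a whole folder of generations from a script, the same exiftool call can be driven from Python. This is a minimal sketch assuming exiftool is installed; the helper names are hypothetical, and the tag argument is exactly the one from the command above.

```python
import subprocess
from pathlib import Path


def exiftool_args(exiftool: str, image: str) -> list[str]:
    """Build the argument list that tags one image as equirectangular."""
    # Passing a list (no shell) means paths and the tag value need no extra quoting.
    return [exiftool, "-XMP:ProjectionType=equirectangular", image]


def tag_folder(exiftool: str, folder: str) -> None:
    """Run exiftool on every PNG in a folder (assumes exiftool is installed)."""
    for image in sorted(Path(folder).glob("*.png")):
        subprocess.run(exiftool_args(exiftool, str(image)), check=True)
```

By default exiftool keeps the untouched file as a backup with `_original` appended to its name.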

Civitai VR native support when? :)

If you have a VR system, you can view equirectangular images as immersive environments in many different VR media players like SteamVR Media Player, Deo VR, etc.

You can also use a Stereo Image Node with a depth map to create a stereo panorama with a 4:1 aspect ratio (the left- and right-eye views side by side). When viewed in VR, objects then appear at the distances given by the depth map. I've gotten good results using a standard MiDaS depth map and the polylines_sharp fill technique on the Stereo Image Node. Other depth map methods might give better results; I'm not sure whether there is a depth model designed for equirectangular panoramas.
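The Stereo Image Node's internals aren't described here, so the following is only a rough, hypothetical illustration of the idea in NumPy: shift each pixel horizontally by a disparity proportional to its nearness, wrapping across the left and right edges, fill the gaps from the left neighbour, and place the result beside the original to get a 4:1 side-by-side image. It assumes a MiDaS-style depth map in which larger values mean nearer, uses naive hole filling rather than the node's polylines_sharp technique, and treats the panorama as a flat image, which is only an approximation for 360 stereo.

```python
import numpy as np


def naive_stereo_panorama(image: np.ndarray, depth: np.ndarray, max_shift: int = 8) -> np.ndarray:
    """Return a side-by-side (left | right) stereo image from one panorama and its depth map.

    image: (H, W) or (H, W, C) array; depth: (H, W) array where larger = nearer (MiDaS-style).
    """
    h, w = depth.shape
    d = depth.astype(np.float64)
    span = d.max() - d.min()
    nearness = (d - d.min()) / span if span > 0 else np.zeros_like(d)
    shift = np.rint(nearness * max_shift).astype(np.int64)

    # Right-eye view: move each pixel left by its disparity, wrapping across the seam.
    ys, xs = np.indices((h, w))
    target = (ys * w + (xs - shift) % w).ravel()
    source = (ys * w + xs).ravel()

    # Z-buffer: where several pixels land on the same target, keep the nearest one.
    order = np.lexsort((-nearness.ravel(), target))
    target_sorted = target[order]
    _, first = np.unique(target_sorted, return_index=True)
    hit_target = target_sorted[first]
    hit_source = source[order][first]

    flat = image.reshape(h * w, -1)
    right = np.zeros_like(flat)
    right[hit_target] = flat[hit_source]
    filled = np.zeros(h * w, dtype=bool)
    filled[hit_target] = True
    right = right.reshape(image.shape)
    filled = filled.reshape(h, w)

    # Fill holes with the nearest filled pixel to their left, wrapping around the row.
    positions = np.arange(2 * w)
    for y in range(h):
        marks = np.where(np.tile(filled[y], 2), positions, -1)
        last_filled = np.maximum.accumulate(marks)[w:] % w
        right[y] = right[y, last_filled]

    return np.concatenate([image, right], axis=1)
```

With a 1024 x 512 panorama the result is 2048 x 512, the 4:1 layout mentioned above. `max_shift` (the largest disparity in pixels) is a hypothetical knob, and 8 is an arbitrary default.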

Compatibility with other LoRAs seems pretty good, though I haven't tested very many. It works quite well with the dev-to-schnell LoRA if you want faster generation times (about 8-10 steps instead of 20-30), at the cost of making the textures a bit less realistic and a bit more cartoony.

This model has rank 32 and was trained for 24 epochs on 128 training images (3072 steps). This was the point at which it seemed to converge on understanding the equirectangular format; further training did not seem to help. For captions, I used detailed captions generated by JoyCaption, lightly edited by hand, plus some basic information about the structure of the equirectangular projection. It was trained using AI Toolkit.
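The step count follows from the epoch and image counts. The 3072 figure works out exactly with one image per step, so this sketch assumes a batch size of 1 and no gradient accumulation (an inference, not something stated above); `training_steps` is a hypothetical helper.

```python
import math


def training_steps(epochs: int, images: int, batch_size: int = 1) -> int:
    """Total optimizer steps: one pass over the images per epoch, partial batches rounded up."""
    return epochs * math.ceil(images / batch_size)


print(training_steps(24, 128))  # 3072
```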

Limitations

This model doesn't fully fix the seam issue. Since Flux is a transformer model, we can't just use asymmetric tiled sampling with clever padding tricks like we could with convolutional models. On the other hand, because it is a transformer, Flux naturally has long-range attention that lets it correlate opposite sides of the image, and this attention can be trained.
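For contrast, here is a minimal NumPy sketch of the kind of asymmetric padding trick that works for convolutional models: wrap the feature map around horizontally, since longitude is periodic, but not vertically, so a 3x3 kernel at the left edge also sees pixels from the right edge. The function name and the choice of zero padding at the top and bottom are illustrative assumptions.

```python
import numpy as np


def pad_for_panorama(feature_map: np.ndarray, pad: int) -> np.ndarray:
    """Pad a (H, W) map: wrap left/right (periodic longitude), zeros top/bottom (poles are not periodic)."""
    wrapped = np.pad(feature_map, ((0, 0), (pad, pad)), mode="wrap")
    return np.pad(wrapped, ((pad, pad), (0, 0)), mode="constant")
```

Running a 3x3 convolution with no further padding over the result of `pad_for_panorama(x, 1)` gives an output the same size as `x`, and the outermost columns are computed from each other's pixels, so no seam forms.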

Although it doesn't fully fix the seams, this model greatly improves them in most cases. As long as the objects on either side of the seam aren't completely incompatible, applying a circular shift to the image and then inpainting the seam is usually enough to fix it. I have provided an example workflow for fixing this seam issue.
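The circular-shift step can be sketched in NumPy: roll the image by half its width so the former left and right edges meet in the middle, build an inpainting mask over a vertical band there, inpaint with whatever tool you use, and optionally roll back (a horizontal roll only changes the panorama's starting heading). This is an illustration of the idea, not the provided workflow; the helper names and the 64-pixel band width are hypothetical.

```python
import numpy as np


def shift_seam_to_center(image: np.ndarray) -> np.ndarray:
    """Roll a panorama by half its width; the wrap-around seam moves to the center."""
    return np.roll(image, image.shape[1] // 2, axis=1)


def unshift_seam(image: np.ndarray) -> np.ndarray:
    """Undo shift_seam_to_center."""
    return np.roll(image, -(image.shape[1] // 2), axis=1)


def seam_mask(height: int, width: int, band: int = 64) -> np.ndarray:
    """White (255) vertical band centered on the shifted seam; everything else black."""
    mask = np.zeros((height, width), dtype=np.uint8)
    center = width // 2
    mask[:, center - band // 2 : center + band // 2] = 255
    return mask
```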

Since most of the training data consisted of landscape panoramas, the model is very good at these (and base Flux isn't as bad at them to begin with). However, indoor scenes were barely represented in the training data. As a result, indoor scenes often have badly wrong proportions: objects can appear far larger than they should, rooms may have the wrong number of walls, faces may be distorted, and so on. I'd like to retrain with a better balance of scenes at some point.

Attribution

This LoRA was trained on equirectangular images freely available online, primarily from the Flickr group for equirectangular panoramas, since it has high-quality photos without watermarks. I used images from the following users: j.nagel, Kevin Jennings, Uwe Dörnbrack, Cristian Marchi, Patricia Müller, Tiger Lin Panowork, and Faillace. I'd like to thank them for the excellent photography that makes this work possible. That said, it's highly unlikely that images substantially similar to those in the training set will show up in this model's outputs; the training images mainly served as examples of how spherical lens distortion affects images.

Since this model was built on FLUX.1 Dev, images generated with it must still comply with the FLUX.1 Dev Non-Commercial License.

Description

Rank 32, should preserve fine details better