I am sharing how I trained this model with full details and even the dataset: please read entire post very carefully.

This model is purely trained for educational and research purposes only for SFW and ethical image generation.

The workflow and the config used in this tutorial can be used to train clothing, items, animals, pets, objects, styles, simply anything.

The uploaded images have SwarmUI metadata and can be re-generated exactly. For generations FP16 model used but FP8 should yield almost same quality. Don't forget to have used yolo face masking model in prompts.

How To Use

Download model into LoRA folder of the SwarmUI. Then you need to use Clip-L and T5-XXL models as well. I recommend T5-XXL FP16 or Scaled FP8 version.

A newest fully public tutorial here for how to use :

I have trained both FLUX LoRA and Fine-Tuning / DreamBooth model.

Activation token / trigger word : ohwx man

Each training was up to 200 epochs and once every 10 epoch checkpoints saved and shared on below Hugging Face Repo : https://huggingface.co/MonsterMMORPG/Model_Training_Experiments_As_A_Baseline

This model contains experimental results comparing Fine-Tuning / DreamBooth and LoRA training approaches.

Additional Resources

Installers and Config Files : https://www.patreon.com/posts/112099700

FLUX Fine-Tuning / DreamBooth Zero-to-Hero Tutorial : https://youtu.be/FvpWy1x5etM

FLUX LoRA Training Zero-to-Hero Tutorial : https://youtu.be/nySGu12Y05k

Complete Dataset, Training Config Json Files and Testing Prompts : https://www.patreon.com/posts/114972274

Click below link to download all trained LoRA and Fine-Tuning / DreamBooth checkpoints for free

https://huggingface.co/MonsterMMORPG/Model_Training_Experiments_As_A_Baseline/tree/main

Environment Setup

Kohya GUI Version:

021c6f5ae3055320a56967284e759620c349aa56Torch: 2.5.1

xFormers: 0.0.28.post3

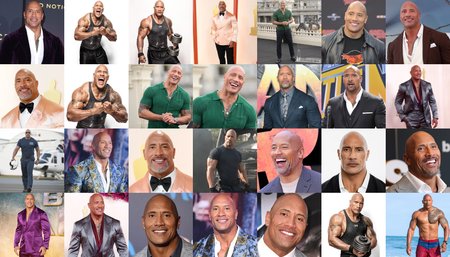

Dataset Information

Resolution: 1024x1024

Dataset Size: 28 images

Captions: "ohwx man" (nothing else)

Activation Token/Trigger Word: "ohwx man"

Fine-Tuning / DreamBooth Experiment

Configuration

Config File:

48GB_GPU_28200MB_6.4_second_it_Tier_1.jsonTraining: Up to 200 epochs with consistent config

Optimal Result: Epoch 170 (subjective assessment)

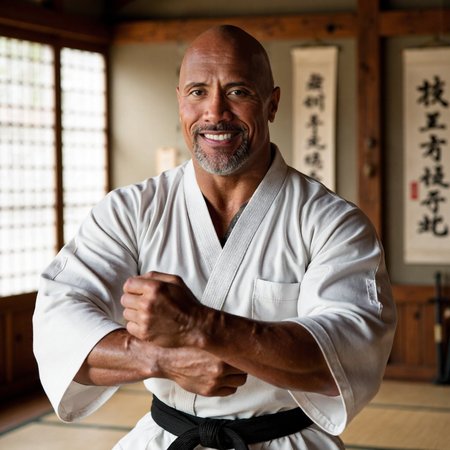

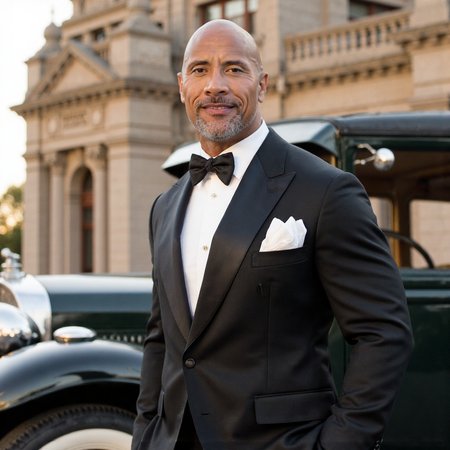

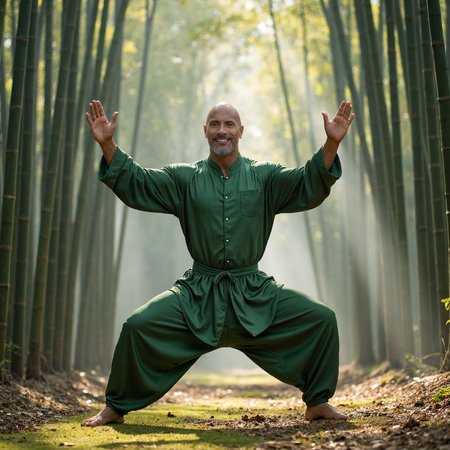

Results

LoRA Experiment

Configuration

Config File:

Rank_1_29500MB_8_85_Second_IT.jsonTraining: Up to 200 epochs

Optimal Result: Epoch 160 (subjective assessment)

Results

Comparison Results

I have tested FP8 vs FP16 vs FP32 LoRA Difference As a Grid

Model Variants Are Also Tested With The LoRA - FP32 Version

FP8 FLUX DEV Base vs FP8 Scaled vs GGUF 8 vs FLUX DEV :

Works best with FP16 DEV base model, then GGUF 8 base model and then FP8 raw base model and FP8 scaled model sometimes works better sometimes worse

Key Observations

LoRA demonstrates excellent realism but shows more obvious overfitting when generating stylized images.

Fine-Tuning / DreamBooth is better than LoRA as expected.

FP8 almost yields perfect quality as FP32 with LoRA

I have used Kohya GUI to convert FP32 saved LoRAs into FP16 and FP8

Here full public article : https://www.patreon.com/posts/115376830

Model Naming Convention

Fine-Tuning Models

Dwayne_Johnson_FLUX_Fine_Tuning-000010.safetensors10 epochs

280 steps (28 images × 10 epochs)

Batch size: 1

Resolution: 1024x1024

Dwayne_Johnson_FLUX_Fine_Tuning-000020.safetensors20 epochs

560 steps (28 images × 20 epochs)

Batch size: 1

Resolution: 1024x1024

LoRA Models

Dwayne_Johnson_FLUX_LoRA-000010.safetensors10 epochs

280 steps (28 images × 10 epochs)

Batch size: 1

Resolution: 1024x1024

Dwayne_Johnson_FLUX_LoRA-000020.safetensors20 epochs

560 steps (28 images × 20 epochs)

Batch size: 1

Resolution: 1024x1024

Description

For Full Details, Training Dataset, Tutorial, Guide, Configs, Training Json Files, Workflows, Installers, Resources and All Checkpoints > https://huggingface.co/MonsterMMORPG/Model_Training_Experiments_As_A_Baseline

This is FP16 converted version of original FP32 training