Project: Touching Grass

Models are overtrained. Styles are fixed. When AI is trained on AI images maybe from another AI which was also trained on AI images and so on and so on. Probabilities will collapse to zero. Details will be lost. Everything will look the same. This is what is happening now.

Time to touch grass.

Versions

(4/15/2025) v0.2:

+30% images. Because there is a bug causing all avif files not being used in v0.1. Which is 30% of the dataset. lol.

Changed some parameters, stronger and cleaner effect.

(4/02/2025) v0.1: init release.

Dataset

Note: This LoRA is trained on a sub dataset of the Stabilizer LoRA. Which means there will be overlapped effect. So it is NOT recommended to use both of them together.

This sub dataset contains ~1K real world S-tier photographs, very diverse and creative, high quality and high resolution. Contains nature, indoors, animals, buildings...many things, except humans. So it does not contain "human" knowledge. And can be used in both 2D anime and 3D realistic models.

Trained with natural captions from LLM. Mainly because WD tagger v3 is really bad at real world images. Also because natural captions have more diverse vocabularies and can avoid overfitting.

How to use

So to put it simply, this LoRA contains diverse knowledge of the real world, minus human. Depends on your usage, it can

bring better photography effects (better lighting, depth of field, etc.),

"fix" the overfitted 2D anime models. It cannot "undo" the training, but can add pixel level natural texture/details, and bring back creativity and chaos to the models.

a so-called "detailer". But instead of amplifying the noise in your base model to generate more objects. This LoRA focuses on natural texture.

significantly improve background structural stability for anime models. Less deformed background, like deformed rooms, buildings. Because that's what the dataset made of. And anime dataset doesn't contain much background and texture knowledge. Even if it has, most of them are abstract art backgrounds and lacking proper tags. So the base model will forget it or learn weird things during training.

Note:

Trained on Illus v0.1. But also works on NoobAI.

Even there is no human data/knowledge in this LoRA, When you apply it on anime models, the realistic environment still can hint the model to generate realistic human if your model contains/mixed realistic human dataset. Try to lower this LoRA strength if this happens. Or higher 2D style weight. The final effect on characters heavily depends on the "subconscious" of your base model .

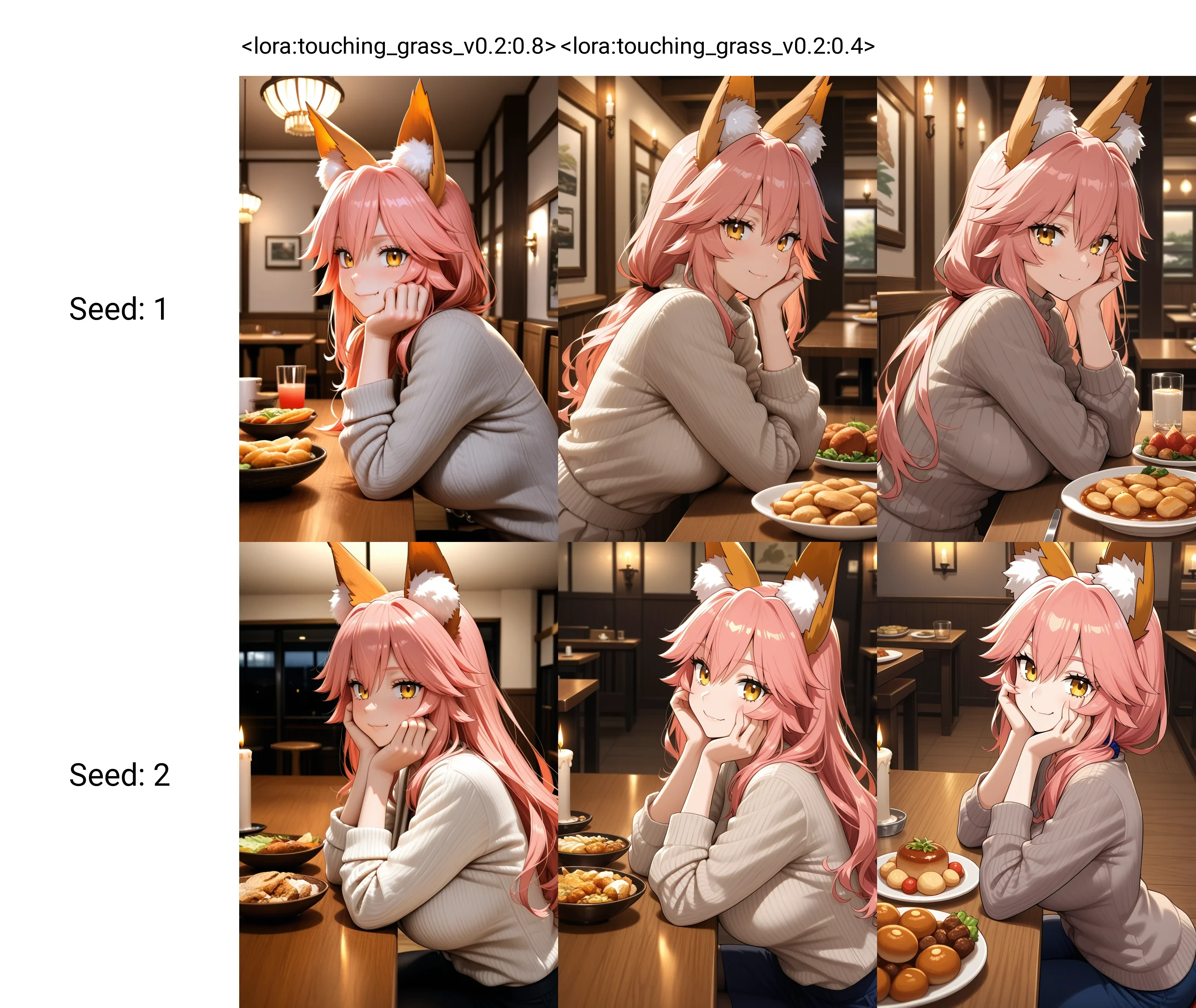

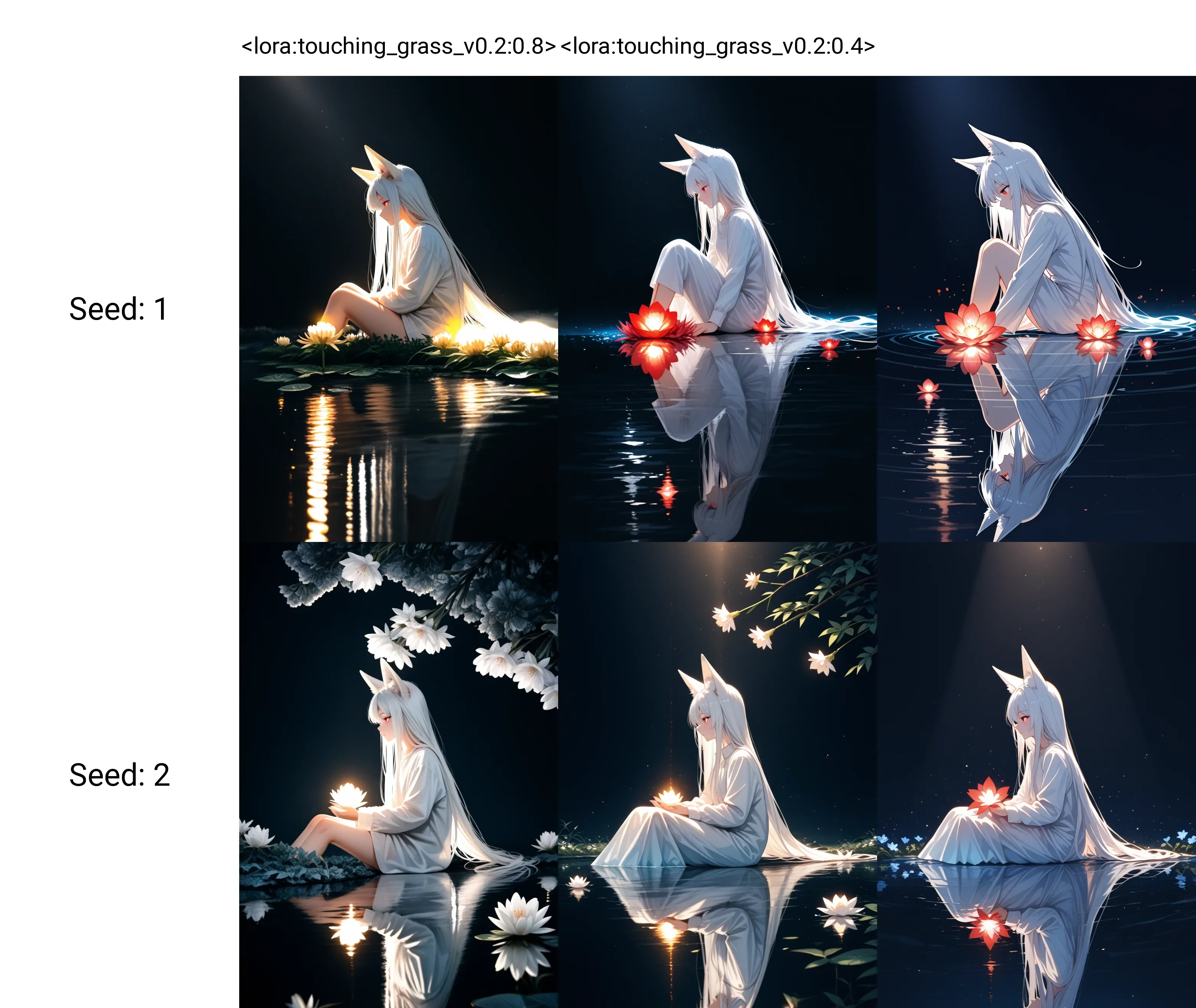

Some quick comparations on Wai v13. Full images are in the cover section.

Better lighting, shadows, background, overall texture.

RTX ON Better campfire, ground, grass.

Better campfire, ground, grass.

Share merges using this LoRA is prohibited. FYI, there are hidden trigger words to print invisible watermark. It works well even if the merge strength is 0.05. I coded the watermark and detector myself. I don't want to use it, but I can.