PLEASE NOTE - ALL MY CELEBRITY LORAS WILL BE DELETED (BY MYSELF) BY THE END OF JUNE 2024.

April 2024 - New Version

A new LoRA trained from scratch using triple-training, with a large share of synthetic data.

This new model can do much more than the old one.

Requires a LoRA strength of 0.15.

Same trigger as before: sybildanning woman

Trained with 177 images, including synthetic material.

Very likely to produce ????? material.

OLD VERSION/TEXT:

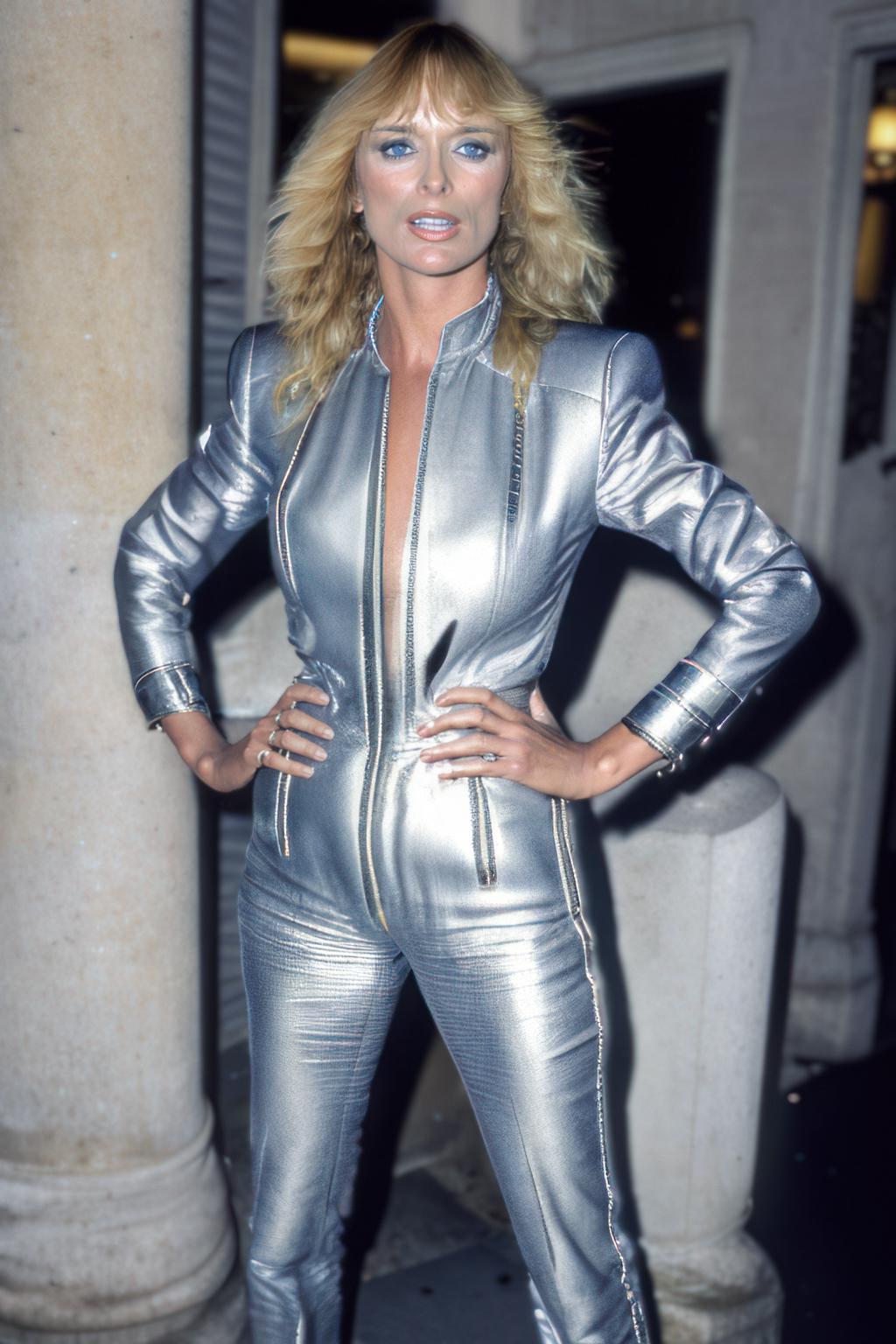

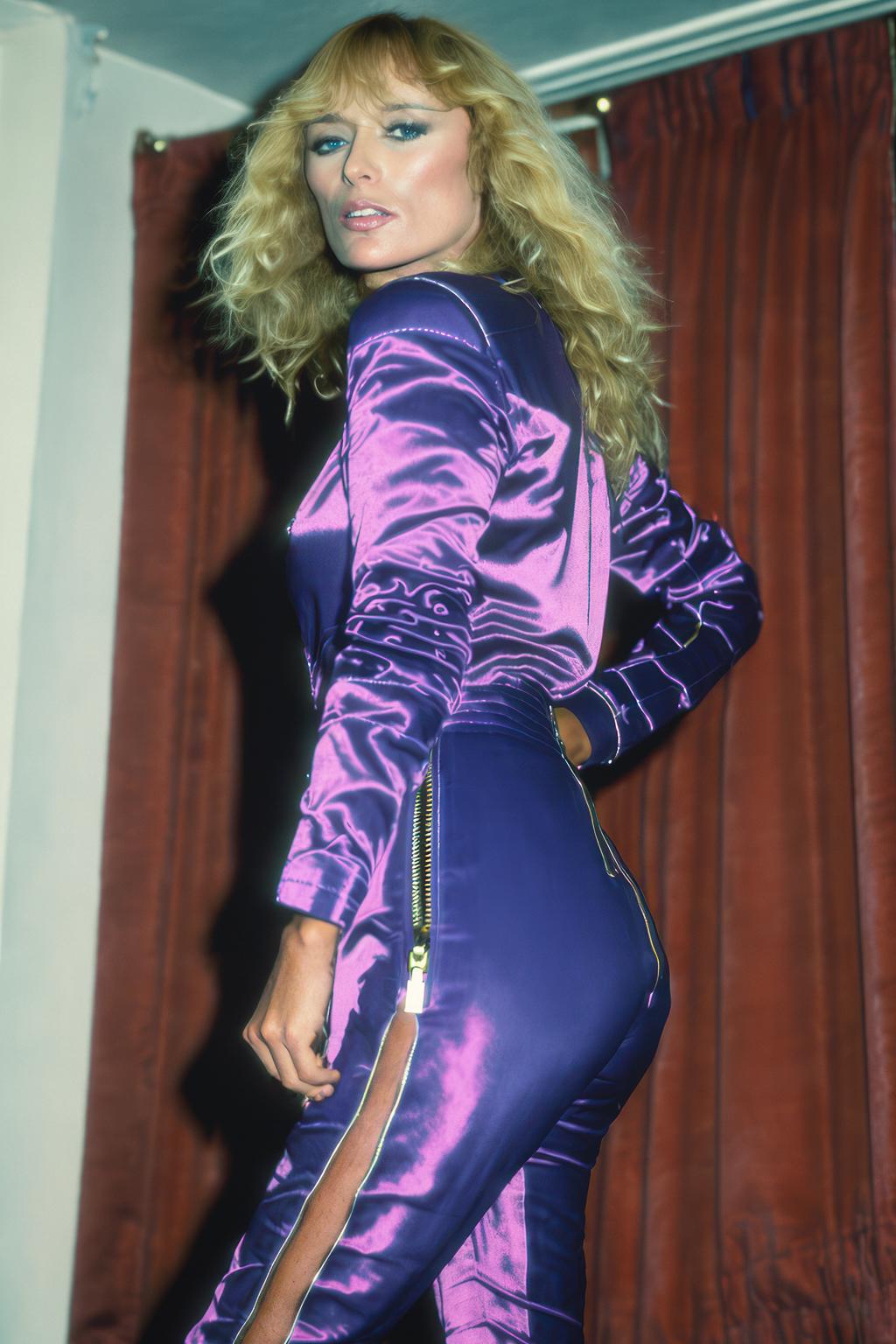

LoRA of Austrian/US cult actress Sybil Danning. After spending nearly all of the 1970s in low-budget European movies, she reached her career high in the early 1980s, when a string of cult hits such as Battle Beyond the Stars led to a memorable feature in Playboy in 1983.

Uses 76 reference (ref) images and ???? (real) regularization (reg) images, trained for 20,000 steps at a batch size of 1, using BF16 on a 3090, with a training time of four hours. 20 repeats, 4 epochs.
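As a rough sanity check on those numbers, the sketch below multiplies out images, repeats and epochs. It is only arithmetic under stated assumptions: the function name `total_steps` is hypothetical, and it counts the reference images only, ignoring the regularization images (whose count is not given above), so it is not expected to reproduce the 20,000-step figure.

```python
import math


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int = 1) -> int:
    """Estimate optimizer steps from the reference images alone.

    Assumption: one step per batch, with each image seen `repeats` times per epoch.
    Regularization images are NOT included in this estimate.
    """
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


# Values taken from the description: 76 images, 20 repeats, 4 epochs, batch size 1.
print(total_steps(76, 20, 4, batch_size=1))  # 6080
```

The gap between this estimate and the stated step count may come from the regularization images or from other trainer settings not listed here.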

The data for this model is almost entirely different from what I used for my previous Sybil Danning DreamBooth checkpoint, and was heavily curated and manually annotated (with some additional annotations from WD14).

This LoRA is far more flexible and disentangled than that earlier version. Keep the CFG fairly low to moderate, and keep the sampling steps low (20 will give good results when playing around with new poses, though you'll sometimes want to turn it up for inpainting). A LoRA strength of 1 is fine for this model.

I generally use these models in complex workflows, inpainting faces after initial T2Is, and using ControlNet extensively. So if you're hoping for one-click prompt magic, the data for my models isn't curated with that in mind; they're meant as tools for traditional workflows that use Photoshop and other older methods.

This model is very likely to produce ????? renderings unless counter-prompted.

Description

Trained from scratch on 76 annotated and highly curated images, using BF16 on a 3090, in Kohya, for 5 hours. Twenty repeats, four epochs. Quite flexible and disentangled, but don't expect miracles, because the source data is very old.