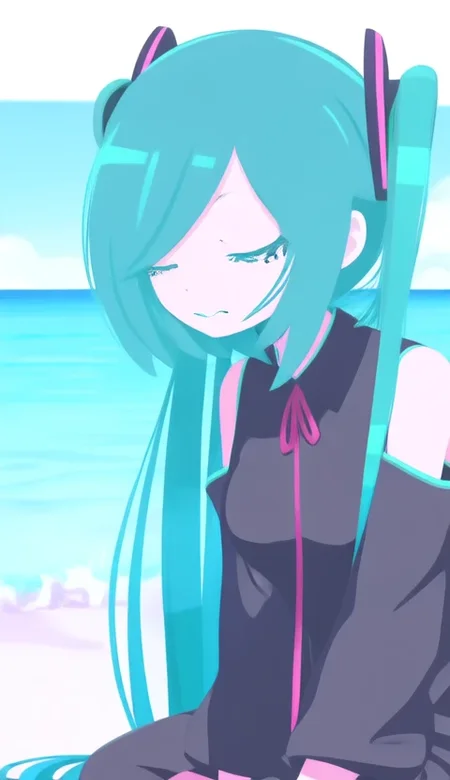

Flat Color - Style

Trained on images without visible lineart, flat colors, and little to no indication of depth.

ℹ️ LoRAs work best when applied to the base models they were trained on. Please read the About This Version section of each version for its base model and workflow/training information.

This is a small style LoRA I thought would be interesting to try with a v-pred model (NoobAI v-pred), in particular for its reduced color bleeding and strong blacks.

The effect is quite nice and easy to evaluate during training, so in later versions I extended the dataset with videos for text-to-video models like Wan and Hunyuan. It is now what I generally use to test LoRA training on new models.

Recommended prompt structure:

Positive prompt:

flat color, no lineart, blending, negative space,

{{tags}}

masterpiece, best quality, very aesthetic, newest
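The template above can be filled in programmatically, for example when batch-generating previews. The sketch below is a minimal, hypothetical helper: `build_prompt` and the example tags are illustrative only, and it flattens the three segments into a single comma-separated line.

```python
def build_prompt(tags: list[str]) -> str:
    """Fill the {{tags}} slot of the recommended prompt structure (hypothetical helper)."""
    style = "flat color, no lineart, blending, negative space"
    quality = "masterpiece, best quality, very aesthetic, newest"
    # Style tags first, subject tags in the middle, quality tags last.
    return ", ".join([style, *tags, quality])


print(build_prompt(["1girl", "solo", "outdoors"]))
```

With no subject tags, the helper simply joins the style and quality segments.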

[WAN 1.3B] LoRA

Trained with diffusion-pipe on Wan2.1-T2V-1.3B

Increased video training resolution to 512

Lowered video FPS to 16

Updated frame_buckets to match video frame counts

Seems usable for both text-to-video and image-to-video workflows with Wan
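The FPS and frame_buckets changes interact. At 16 fps, a clip's frame count is roughly its duration in seconds times 16, and each video has to land in one of the frame buckets. The sketch below makes two assumptions that were not checked against diffusion-pipe's source. First, a video goes to the longest bucket that does not exceed its frame count. Second, `video_clip_mode = 'single_middle'` takes a centered clip of that length. The bucket list is the video one from this card's dataset.toml. The clip durations are hypothetical.

```python
import math

FPS = 16
# Video frame buckets from the dataset.toml in this card.
FRAME_BUCKETS = [28, 31, 32, 36, 42, 43, 48, 50, 53]


def frame_count(duration_s: float, fps: int = FPS) -> int:
    """Number of whole frames in a clip of the given duration at the given fps."""
    return math.floor(duration_s * fps)


def assign_bucket(n_frames: int, buckets=FRAME_BUCKETS):
    """Assumed rule: longest bucket <= n_frames; None if the clip is shorter than every bucket."""
    fitting = [b for b in buckets if b <= n_frames]
    return max(fitting) if fitting else None


def middle_clip(n_frames: int, bucket: int) -> range:
    """Assumed 'single_middle' behavior: take `bucket` frames centered in the video."""
    start = (n_frames - bucket) // 2
    return range(start, start + bucket)


for seconds in (1.5, 2.0, 2.7, 3.5):
    n = frame_count(seconds)
    b = assign_bucket(n)
    # Under the assumed rule, a clip shorter than the smallest bucket is not used.
    clip = middle_clip(n, b) if b is not None else "no bucket fits"
    print(f"{seconds}s -> {n} frames -> bucket {b} -> {clip}")
```

Under this rule, buckets that equal the clips' actual frame counts (as the change note describes) mean that no frames are trimmed.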

Text-to-video previews were generated with the ComfyUI_examples/wan/#text-to-video workflow.

The LoRA was loaded with the LoraLoaderModelOnly node on the fp16 1.3B model, wan2.1_t2v_1.3B_fp16.safetensors.
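Conceptually, a LoRA loader adds a low-rank update `B @ A` to the base model's weights, scaled by a strength value. This is also why a LoRA is tied to its base model: it stores only the delta learned against one specific set of base weights. The toy sketch below shows that arithmetic in plain Python. Two simplifications apply: the rank is 2 for readability (this card trains rank 32, per the [adapter] section), and the alpha/rank factor is assumed to be 1.0. It illustrates the technique and is not ComfyUI's implementation.

```python
def matmul(a, b):
    """Plain-Python product of an (m x k) and a (k x n) list-of-lists matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(w, lora_a, lora_b, strength=1.0, scale=1.0):
    """Return W + strength * scale * (B @ A) as a new matrix; W is not modified.

    w: d_out x d_in base weight, lora_b: d_out x r, lora_a: r x d_in.
    `scale` stands in for alpha / rank (assumed to be 1.0 here).
    """
    delta = matmul(lora_b, lora_a)
    return [[w[i][j] + strength * scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


# Toy 3x3 base weight with a rank-2 update.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
A = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]    # r x d_in = 2 x 3
B = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.5]]  # d_out x r = 3 x 2

print(apply_lora(W, A, B, strength=1.0))
print(apply_lora(W, A, B, strength=0.0) == W)  # strength 0 leaves the base weights unchanged
```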

Image-to-video previews were generated with the ComfyUI_examples/wan/#image-to-video workflow.

The input images are generations from the IL/NoobAI versions of this model card.

dataset.toml

```toml
# Resolution settings.
resolutions = [512]

# Aspect ratio bucketing settings
enable_ar_bucket = true
min_ar = 0.5
max_ar = 2.0
num_ar_buckets = 7

# Frame buckets (1 is for images)
frame_buckets = [1]

[[directory]] # IMAGES
# Path to the directory containing images and their corresponding caption files.
path = '/mnt/d/huanvideo/training_data/images'
num_repeats = 5
resolutions = [720]
frame_buckets = [1] # Use 1 frame for images.

[[directory]] # VIDEOS
# Path to the directory containing videos and their corresponding caption files.
path = '/mnt/d/huanvideo/training_data/videos'
num_repeats = 5
resolutions = [512] # Set video resolution
frame_buckets = [28, 31, 32, 36, 42, 43, 48, 50, 53]
```

config.toml

```toml
# Output directory for training runs.
output_dir = '/mnt/d/wan/training_output'

# Dataset config file.
dataset = 'dataset.toml'

# Training settings
epochs = 50
micro_batch_size_per_gpu = 1
pipeline_stages = 1
gradient_accumulation_steps = 4
gradient_clipping = 1.0
warmup_steps = 100

# eval settings
eval_every_n_epochs = 5
eval_before_first_step = true
eval_micro_batch_size_per_gpu = 1
eval_gradient_accumulation_steps = 1

# misc settings
save_every_n_epochs = 5
checkpoint_every_n_minutes = 30
activation_checkpointing = true
partition_method = 'parameters'
save_dtype = 'bfloat16'
caching_batch_size = 1
steps_per_print = 1
video_clip_mode = 'single_middle'

[model]
type = 'wan'
ckpt_path = '../Wan2.1-T2V-1.3B'
dtype = 'bfloat16'
# You can use fp8 for the transformer when training LoRA.
transformer_dtype = 'float8'
timestep_sample_method = 'logit_normal'

[adapter]
type = 'lora'
rank = 32
dtype = 'bfloat16'

[optimizer]
type = 'adamw_optimi'
lr = 5e-5
betas = [0.9, 0.99]
weight_decay = 0.02
eps = 1e-8
```
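The sketch below is a rough way to see how the batching and schedule settings in these two files combine into optimizer steps. The effective batch is micro_batch_size_per_gpu × gradient_accumulation_steps × the number of GPUs. With `pipeline_stages = 1`, every GPU acts as a data-parallel replica. The image and video counts are hypothetical. The estimate also ignores that real batches are formed within aspect-ratio and frame buckets, so the trainer's actual step count may differ somewhat.

```python
import math

# Values copied from config.toml / dataset.toml in this card.
EPOCHS = 50
MICRO_BATCH = 1      # micro_batch_size_per_gpu
GRAD_ACCUM = 4       # gradient_accumulation_steps
WARMUP_STEPS = 100   # warmup_steps
SAVE_EVERY = 5       # save_every_n_epochs
NUM_REPEATS = 5      # num_repeats for both directories


def estimate_schedule(n_images: int, n_videos: int, n_gpus: int = 1) -> dict:
    """Approximate step counts, ignoring per-bucket batching (an assumption)."""
    samples_per_epoch = (n_images + n_videos) * NUM_REPEATS
    effective_batch = MICRO_BATCH * GRAD_ACCUM * n_gpus
    steps_per_epoch = math.ceil(samples_per_epoch / effective_batch)
    total_steps = steps_per_epoch * EPOCHS
    return {
        "effective_batch": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "warmup_fraction": WARMUP_STEPS / total_steps,
        "saved_epochs": list(range(SAVE_EVERY, EPOCHS + 1, SAVE_EVERY)),
    }


# Hypothetical dataset: 40 captioned images and 20 captioned videos on one GPU.
print(estimate_schedule(40, 20))
```

For this hypothetical dataset, the 100 warmup steps cover under 3% of the 3750 estimated steps. Saving every 5 epochs yields ten LoRA checkpoints to compare.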