(This is my first workflow and my first upload, so I apologize in advance for the mess of nodes.)

Based on the original workflow by Lovis Odin: https://github.com/lovisdotio/workflow-comfyui-single-image-to-lora-flux

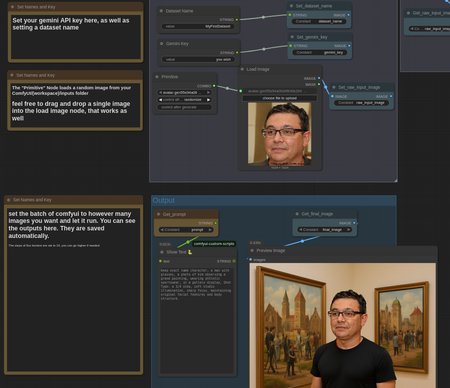

This ComfyUI workflow turns one or more images into many more images for dataset generation. The resulting dataset can then be used for fine-tuning.

Simply place your images in the "inputs" folder. The workflow then:

1. selects one of the input images at random,
2. asks Gemini to analyze it and come up with prompts,
3. selects one of those prompts at random, and
4. uses FLUX.1 Kontext to generate a new image based on the input image and the selected prompt.

A node for loading a GGUF version of FLUX.1 Kontext is included for my fellow VRAM-poor friends.
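In the workflow, the random selection of an image and a prompt happens inside ComfyUI nodes. As a rough illustration of that same logic outside ComfyUI, here is a minimal Python sketch. It assumes the LLM is asked to return one prompt per line and that the input images use common file extensions; the function names are hypothetical and are not part of this workflow or of any node package.

```python
import random
import re
import tempfile
from pathlib import Path

# Assumption: typical image extensions; adjust to whatever you keep in "inputs".
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def pick_random_image(input_dir: Path, rng: random.Random) -> Path:
    """Return one randomly chosen image file from input_dir (hypothetical helper)."""
    images = sorted(
        p for p in input_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
    if not images:
        raise FileNotFoundError(f"no images found in {input_dir}")
    return rng.choice(images)


def split_prompts(llm_text: str) -> list[str]:
    """Split an LLM reply into individual prompts.

    Assumption: one prompt per line; leading list markers such as
    "1.", "2)" or "-" are stripped and blank lines are skipped.
    """
    prompts = []
    for line in llm_text.splitlines():
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if cleaned:
            prompts.append(cleaned)
    return prompts


def pick_random_prompt(llm_text: str, rng: random.Random) -> str:
    """Pick one prompt at random from the LLM reply (hypothetical helper)."""
    prompts = split_prompts(llm_text)
    if not prompts:
        raise ValueError("LLM reply contained no prompts")
    return rng.choice(prompts)


if __name__ == "__main__":
    rng = random.Random(0)
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("a.png", "b.jpg", "notes.txt"):
            (Path(tmp) / name).touch()
        # Prints one of: a.png, b.jpg (notes.txt is ignored)
        print(pick_random_image(Path(tmp), rng).name)
    reply = "1. A portrait in soft window light\n2. The same subject at night\n"
    print(pick_random_prompt(reply, rng))
```

Seeding `random.Random` makes a run reproducible, which can help when you want to regenerate a specific dataset image later.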

Getting the "comfyui_fill-nodes" package (which contains the "ask gemini for text" node) running was a real pain. What finally helped was editing the `__init__.py` file in the package folder and deleting all lines that are not related to Gemini. If you know of a simpler node package for Gemini (or any other LLM) access, please let me know!