FlashAttention VS Test

Tests and graphs attention implementations on your system.
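The repo's own benchmark script is not shown here. As a hedged illustration of what "testing attentions" compares, here is a minimal, dependency-free Python sketch. It times a naive attention pass against a FlashAttention-style tiled pass that uses an online softmax, and checks that both produce the same output. The names `naive_attention`, `tiled_attention` and `run_benchmark` are hypothetical and not part of this repo. Pure-Python timings say nothing about real GPU kernels.

```python
import math
import random
import time


def naive_attention(q, k, v):
    """softmax(Q K^T / sqrt(d)) V, building each full score row before normalising."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        m = max(scores)  # subtract the row max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) / total
                    for c in range(len(v[0]))])
    return out


def tiled_attention(q, k, v, block=16):
    """FlashAttention-style pass: visit K/V in blocks and keep a running max and a
    running softmax denominator (online softmax) instead of a full score row."""
    scale = 1.0 / math.sqrt(len(q[0]))
    dv = len(v[0])
    out = []
    for qi in q:
        m = -math.inf      # running max of the scores seen so far
        l = 0.0            # running sum of exp(score - m)
        acc = [0.0] * dv   # running un-normalised output
        for start in range(0, len(k), block):
            kb, vb = k[start:start + block], v[start:start + block]
            scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in kb]
            m_new = max(m, max(scores))
            correction = math.exp(m - m_new)  # rescale old state to the new max
            l *= correction
            acc = [x * correction for x in acc]
            for s, vj in zip(scores, vb):
                w = math.exp(s - m_new)
                l += w
                for c in range(dv):
                    acc[c] += w * vj[c]
            m = m_new
        out.append([x / l for x in acc])
    return out


def time_it(fn, *args, repeats=3):
    """Return (best wall-clock seconds over `repeats` runs, last result)."""
    best, result = math.inf, None
    for _ in range(repeats):
        t0 = time.perf_counter()
        result = fn(*args)
        best = min(best, time.perf_counter() - t0)
    return best, result


def run_benchmark(seq_len=64, dim=16, seed=0):
    """Time each implementation on the same random Q, K, V and report the max
    absolute difference from the naive output."""
    rng = random.Random(seed)
    rand = lambda: [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
    q, k, v = rand(), rand(), rand()
    results, reference = {}, None
    for name, fn in [("naive", naive_attention), ("tiled", tiled_attention)]:
        seconds, out = time_it(fn, q, k, v)
        if reference is None:
            reference = out
        max_err = max(abs(a - b) for ra, rb in zip(out, reference) for a, b in zip(ra, rb))
        results[name] = {"seconds": seconds, "max_abs_err": max_err}
    return results


if __name__ == "__main__":
    for name, r in run_benchmark().items():
        print(f"{name:>6}: {r['seconds'] * 1000:8.2f} ms  max |err| = {r['max_abs_err']:.2e}")
```

In pure Python the tiled version is usually no faster. FlashAttention's real speedup comes from keeping tiles in fast on-chip GPU memory and cutting reads and writes to GPU DRAM, not from doing fewer arithmetic operations.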

TODO: Add SageAttention.

For ComfyUI users, I recommend:

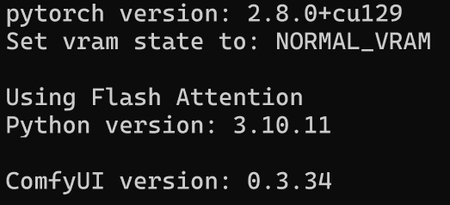

`cmd /k python main.py --fp32-text-enc --fp32-vae --bf16-unet --use-flash-attention`

Note: The compiled FlashAttention build also included here targets CUDA 12.9.

(Tested and working with ComfyUI.)
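Because the compiled build targets a specific CUDA version, it can help to check your Python environment before launching ComfyUI. This is a rough sanity check, not part of this repo. It assumes the package imports as `flash_attn`, the name used by the upstream flash-attention project, and the bundled build may differ. `check_flash_attention_env` is a hypothetical helper. It only does a simple string match on the CUDA version PyTorch was built with.

```python
import importlib
import importlib.util


def check_flash_attention_env(expected_cuda="12.9"):
    """Report which CUDA version PyTorch was built against and whether flash_attn
    imports. Hypothetical helper; nothing here is ComfyUI-specific."""
    report = {"torch_cuda": None, "cuda_available": False,
              "flash_attn_version": None, "cuda_matches": False}
    if importlib.util.find_spec("torch") is not None:
        try:
            import torch
            report["torch_cuda"] = torch.version.cuda  # None on CPU-only builds
            report["cuda_available"] = bool(torch.cuda.is_available())
        except Exception as exc:  # a broken install should not crash the check
            report["torch_cuda"] = None
            report["torch_error"] = str(exc)
    if importlib.util.find_spec("flash_attn") is not None:
        try:
            flash_attn = importlib.import_module("flash_attn")
            report["flash_attn_version"] = getattr(flash_attn, "__version__", "unknown")
        except Exception as exc:  # e.g. a build compiled for a different CUDA/PyTorch
            report["flash_attn_version"] = f"import failed: {exc}"
    report["cuda_matches"] = report["torch_cuda"] == expected_cuda
    return report


if __name__ == "__main__":
    for key, value in check_flash_attention_env().items():
        print(f"{key}: {value}")
```

If `cuda_matches` is `False` or the `flash_attn` import fails, the bundled build probably does not match your PyTorch/CUDA install.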