IMPORTANT UPDATE:

I am discontinuing work on this upscaler for now, because a hires fix is not feasible for SDXL at this point in time. Let me try to explain why, based on my limited understanding.

SDXL uses a two-model setup. The base model is good at generating original images from 100% noise, and the refiner is good at adding detail when roughly 0.35 (35%) of the noise is left in the generation. However, the refiner is only good at refining the leftover noise of an image that is still being generated. If you add random noise to an existing image for it to interpret, it gives you a blurry result, which makes it pretty useless as a hires fix.
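The handoff between the two models can be pictured as one sampling schedule split into two consecutive step ranges. The Python sketch below assumes the refiner's share is given as a fraction of the total steps. That only approximates "noise left", because noise does not fall linearly with steps. `split_steps` and `StageRange` are hypothetical names. The two ranges correspond to the `start_at_step`/`end_at_step` inputs of ComfyUI's KSampler (Advanced) node. For the refiner to continue from the base, the base sampler would also have to return its leftover noise.

```python
from dataclasses import dataclass


@dataclass
class StageRange:
    start_at_step: int  # first step this model samples
    end_at_step: int    # step where this model stops (exclusive)


def split_steps(total_steps: int, refiner_fraction: float) -> tuple[StageRange, StageRange]:
    """Split one schedule into a base range followed by a refiner range, with no gap or overlap."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= refiner_fraction <= 1.0:
        raise ValueError("refiner_fraction must be between 0 and 1")
    # Hypothetical reading: the refiner gets the last `refiner_fraction` of the steps.
    refiner_steps = round(total_steps * refiner_fraction)
    switch = total_steps - refiner_steps
    return StageRange(0, switch), StageRange(switch, total_steps)


base, refiner = split_steps(total_steps=30, refiner_fraction=0.35)
print("base:", base)
print("refiner:", refiner)
```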

The base model actually works better for hires upscaling, but it can't add much detail, so you will always end up with fewer fine details than the original image had. Some SD1.5 checkpoints nowadays are extremely good at fine details without needing a secondary model, which is why my 3.0 setup works much better with SD1.5 checkpoints. Of course, they still aren't as good as SDXL at fine details at around 1024 pixels.

Some possible alternatives:

Upscaling a 1024x1024 image with ESRGAN works extremely well, but it will still leave you with blurry images for shots that aren't close-ups.

Since the base model + refiner works extremely well at generating images from 100% noise, it might be extremely good at inpainting faces, and you can use a face mask detector to do this automatically. That solves the main reason you would use a hires fix in the first place: blurry faces in full-body shots. However, I'm not sure whether SDXL 0.9 can currently be used for inpainting.

The base model could potentially be trained to add detail without the refiner model, but I'm not sure how good an idea that is.

SDXL has yet to be officially released, and someone might come up with solutions to these issues that I can't see right now. But I want to make this clear so that nobody wastes tons of effort trying, to no avail, to get it to work as well as SD1.5.

I'm leaving the workflow up for research purposes, and because it still works better than anything else for SD1.5 models.

___________________________

Found my upscaler useful? Support me on ko-fi https://ko-fi.com/bericbone

You need to load these encoders into the dual CLIP loader in the right order. Put them in your clip folder and reload ComfyUI.

https://huggingface.co/stabilityai/stable-diffusion-xl-base-0.9/tree/main/text_encoder

https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-0.9/tree/main/text_encoder_2

ComfyUI custom nodes needed to use this:

I recommend installing them with: https://civarchive.com/models/71980?modelVersionId=106402

https://civarchive.com/models/20793/was-node-suite-comfyui

https://civarchive.com/models/33192/comfyui-impact-pack

https://civarchive.com/models/32342/efficiency-nodes-for-comfyui

https://civarchive.com/models/21558/comfyui-derfuu-math-and-modded-nodes

https://civarchive.com/models/87609

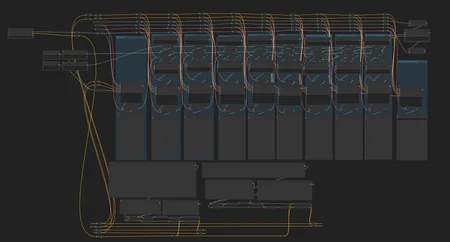

This is an upscaling method I've designed that upscales in smaller increments until the full resolution is reached. It also has an option to use different prompts for the initial image generation and for the upscaler.

The idea is to gradually reinterpret the data as the original image gets upscaled. This gives better hand and finger structure and facial clarity, even for full-body compositions, as well as extremely detailed skin.
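Upscaling in several smaller increments can be sketched as a size schedule. This minimal Python sketch makes three assumptions. Each upscaler multiplies the previous size by its own factor. The factors decrease from step to step. Sizes snap to multiples of 8, because SD latents are 1/8 of the pixel resolution. The factors and denoise values are hypothetical placeholders, not the workflow's settings. `UpscaleStep`, `snap8` and `plan` are made-up names.

```python
from dataclasses import dataclass


@dataclass
class UpscaleStep:
    scale: float    # factor applied to the previous step's width and height
    denoise: float  # denoise strength for the sampler at this step (hypothetical)


def snap8(x: float) -> int:
    """Round a pixel size to the nearest multiple of 8 (latents are 1/8 scale)."""
    return max(8, round(x / 8) * 8)


def plan(width: int, height: int, steps: list[UpscaleStep]) -> list[tuple[int, int, float]]:
    """Return (width, height, denoise) for each upscaling pass, in order."""
    schedule = []
    w, h = width, height
    for step in steps:
        w, h = snap8(w * step.scale), snap8(h * step.scale)
        schedule.append((w, h, step.denoise))
    return schedule


# Hypothetical decreasing factors and denoise strengths, starting from an SD1.5-sized image.
example = plan(512, 768, [
    UpscaleStep(1.5, 0.50),
    UpscaleStep(1.4, 0.45),
    UpscaleStep(1.3, 0.40),
    UpscaleStep(1.2, 0.35),
])
for w, h, d in example:
    print(f"{w}x{h}  denoise={d}")
```

The final entries are the largest, so dropping the last items from the list matches the idea of bypassing the last upscalers when VRAM runs out.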

This version is optimized for 8 GB of VRAM. If the image won't fully render with 8 GB of VRAM, try bypassing a few of the last upscalers. If you have a lot of VRAM to work with, try adding another 0.5 upscaler as the first upscaler. Different models can require very different denoise strengths, so be sure to adjust those as well. There is a preview image for each upscaling step, so you can see where the denoising needs adjustment. If you want to generate images faster, unplug the latent cables going into the VAE decoders that feed the image previewers.

If you have less VRAM, try enabling the tiled VAE and replacing the last VAE decoder with a Tiled VAE decoder. This can also let you reach even higher resolutions, but in my experience it costs some color accuracy. The more tiled VAE decoders you use, the more color accuracy you lose. Even regular VAE decoding loses some color accuracy. Whether you want to use as many upscalers as I do therefore depends on your preferences and the checkpoints you work with. Using fewer upscalers reduces this loss.
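Here is a minimal Python sketch of how an image can be split into overlapping tiles that are decoded one at a time. It shows why tiled decoding does more separate pieces of work as the resolution grows. The 512-pixel tile and 64-pixel overlap are assumptions, not values taken from the workflow. `tile_starts` and `tile_grid` are hypothetical helpers, not ComfyUI's implementation. Each tile is decoded without seeing the rest of the image. That is one plausible reason colors can drift between tiles, but this explanation is an assumption, not something the workflow documents.

```python
import math


def tile_starts(length: int, tile: int, overlap: int) -> list[int]:
    """Start offsets of overlapping tiles that cover [0, length) along one axis."""
    if overlap >= tile:
        raise ValueError("overlap must be smaller than the tile size")
    if length <= tile:
        return [0]
    stride = tile - overlap
    count = math.ceil((length - overlap) / stride)
    starts = [i * stride for i in range(count)]
    starts[-1] = length - tile  # pull the last tile back so it ends exactly at the edge
    return starts


def tile_grid(width: int, height: int, tile: int = 512, overlap: int = 64) -> list[tuple[int, int]]:
    """(x, y) origins of every tile; each tile would be decoded separately."""
    return [(x, y)
            for y in tile_starts(height, tile, overlap)
            for x in tile_starts(width, tile, overlap)]


for size in (1024, 1536, 2048):
    print(f"{size}x{size}: {len(tile_grid(size, size))} tiles")
```

The tile count grows roughly with the image area, so a larger final resolution means more regions that are decoded independently.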

The detail refinement step needs a very low denoise strength. Try not to go above 0.2, and you might need to go as low as 0.03 or lower. This step adds a layer of noise that makes skin look less plastic, and it adds clarity.

There's some logic behind why the scaling factors gradually decrease, which I won't go into too much. Basically, the lower the scale factor, the more a given denoise strength works on smaller details.

If you want to switch the schedulers from karras back to normal, be aware that you need to reduce the denoise strength drastically, because different schedulers apply noise differently.
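The scheduler's effect on denoise strength can be estimated numerically. This Python sketch compares the starting noise level (sigma) of an img2img pass under two schedules. One is a Karras schedule. The other is spaced evenly in timesteps, used here as a stand-in for "normal". The sketch assumes SD1.5's scaled-linear beta schedule (0.00085 to 0.012 over 1000 timesteps) and Karras rho = 7. It also assumes that a denoise of d starts sampling (1 - d) of the way into the full schedule. It uses a continuous approximation rather than ComfyUI's exact step rounding, and all function names are hypothetical.

```python
import math


def sd_sigmas(n: int = 1000, beta_start: float = 0.00085, beta_end: float = 0.012) -> list[float]:
    """Per-timestep noise levels for a scaled-linear beta schedule (SD1.5 defaults)."""
    a, b = math.sqrt(beta_start), math.sqrt(beta_end)
    sigmas, alpha_bar = [], 1.0
    for t in range(n):
        beta = (a + (b - a) * t / (n - 1)) ** 2
        alpha_bar *= 1.0 - beta
        sigmas.append(math.sqrt((1.0 - alpha_bar) / alpha_bar))
    return sigmas


SIGMAS = sd_sigmas()
SIGMA_MIN, SIGMA_MAX = SIGMAS[0], SIGMAS[-1]


def sigma_at_timestep(t: float) -> float:
    """Interpolate log-sigma between neighbouring integer timesteps."""
    lo = int(math.floor(t))
    hi = min(lo + 1, len(SIGMAS) - 1)
    w = t - lo
    return math.exp((1 - w) * math.log(SIGMAS[lo]) + w * math.log(SIGMAS[hi]))


def start_sigma_normal(denoise: float) -> float:
    """Timesteps evenly spaced from last to first, so sampling starts at denoise * (n - 1)."""
    return sigma_at_timestep(denoise * (len(SIGMAS) - 1))


def start_sigma_karras(denoise: float, rho: float = 7.0) -> float:
    """Karras spacing, evaluated (1 - denoise) of the way from sigma_max to sigma_min."""
    a, b = SIGMA_MAX ** (1 / rho), SIGMA_MIN ** (1 / rho)
    f = 1.0 - denoise
    return (a + f * (b - a)) ** rho


for d in (0.03, 0.1, 0.2, 0.5):
    print(f"denoise={d}: normal sigma={start_sigma_normal(d):.3f}, "
          f"karras sigma={start_sigma_karras(d):.3f}")
```

At the same denoise value, the evenly spaced schedule starts from a noticeably higher sigma than Karras, especially at the low values used for detail refinement. That fits the advice to lower the denoise strength after switching from karras to normal.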

If you get a red "missing nodes" error:

Navigate to your was-node-suite-comfyui folder.

Run this in PowerShell: path/to/ComfyUI/python_embeded/python.exe -m pip install -r requirements.txt

(Replace the python.exe path with your own ComfyUI path.)

ESRGAN (HIGHLY RECOMMENDED! Others might give artifacts!):

https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-general-wdn-x4v3.pth

VAE: https://huggingface.co/stabilityai/sd-vae-ft-mse-original/tree/main

Description

Changed upscaling method for more clarity and refinement.

Warning: the setup will no longer prompt you when encoders/decoders get tiled. To avoid color degradation, keep an eye on the previews and make sure you don't get any "Warning: Ran out of memory when regular VAE decoding, retrying with tiled VAE decoding" messages in your console.