Description:

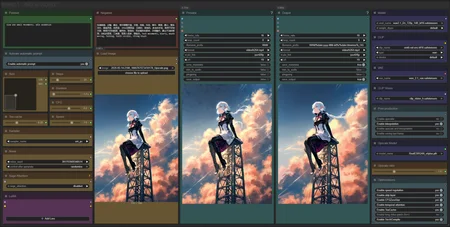

This workflow lets you generate a video from a base image and a text prompt.

You will find a step-by-step guide to using this workflow here: link

My other workflows for WAN: link

Resources you need:

📂Files :

For base version

I2V Model : 480p or 720p

In models/diffusion_models

For GGUF version

I2V Quant Model :

- 720p : Q8, Q5, Q3

- 480p : Q8, Q5, Q3

In models/unet

Common files :

CLIP: umt5_xxl_fp8_e4m3fn_scaled.safetensors

in models/clip

CLIP-VISION: clip_vision_h.safetensors

in models/clip_vision

VAE: wan_2.1_vae.safetensors

in models/vae

Speed LoRA: 480p, 720p

in models/loras

ANY upscale model:

Realistic : RealESRGAN_x4plus.pth

Anime : RealESRGAN_x4plus_anime_6B.pth

in models/upscale_models
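To confirm the common files are in the right folders before loading the workflow, a small check like the one below can help. This is only a sketch: the function name `missing_files` and the idea of passing the ComfyUI root folder are assumptions for illustration. The I2V model, speed LoRA and upscale model are left out because their exact file names depend on which variant you download.

```python
from pathlib import Path

# Relative paths taken from the "Common files" list above.
# The I2V model, LoRA and upscaler are omitted on purpose:
# their file names depend on the variant you picked.
EXPECTED_FILES = [
    "models/clip/umt5_xxl_fp8_e4m3fn_scaled.safetensors",
    "models/clip_vision/clip_vision_h.safetensors",
    "models/vae/wan_2.1_vae.safetensors",
]


def missing_files(comfy_root):
    """Return the expected files that are not present under comfy_root.

    comfy_root is assumed to be the folder that contains "models/".
    """
    root = Path(comfy_root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]
```

Calling `missing_files("path/to/ComfyUI")` returns an empty list when all three files are in place.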

📦Custom Nodes :

Description

Interface adjustment :

replaced the frames slider with a duration slider in seconds,

removed the interpolation ratio slider,

moved all model file selectors into the main window.
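Since the frames slider is now a duration slider, the seconds value has to be turned into a frame count somewhere in the workflow. The sketch below shows one way this conversion could look. It assumes 16 fps output and a frame count of the form 4n + 1; both values are assumptions about the WAN model rather than something stated on this page, and `duration_to_frames` is a hypothetical name.

```python
def duration_to_frames(seconds, fps=16):
    """Convert a duration in seconds to a frame count of the form 4*n + 1.

    Assumptions (not confirmed by the workflow itself):
    - the model generates at 16 frames per second,
    - the frame count must be 4*n + 1.
    """
    raw = round(seconds * fps)   # nearest whole number of frames
    n = max(raw // 4, 1)         # at least one block of 4 frames
    return 4 * n + 1
```

Under these assumptions, 5 seconds gives 81 frames and 8 seconds gives 129 frames.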

Backend :

reduced the number of custom nodes from 12 to 8,

improved the automatic prompt function: 8 words such as "image" or "drawing" are now replaced by "video" to avoid generating static videos,

added a CLIP loader to the GGUF version.
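The word replacement in the automatic prompt function can be pictured roughly as follows. This is an illustrative sketch, not the workflow's actual node logic: only "image" and "drawing" are named above, so the list here is deliberately incomplete, and `videoify` is a hypothetical name.

```python
import re

# Only these two words are named in the description; the workflow
# replaces 8 words in total, the other 6 are not listed here.
STATIC_WORDS = ["image", "drawing"]

# Match whole words only, case-insensitively, so that a word such as
# "imagery" is left untouched.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, STATIC_WORDS)) + r")\b",
    re.IGNORECASE,
)


def videoify(prompt):
    """Replace words that suggest a still picture with "video"."""
    return _PATTERN.sub("video", prompt)
```

For example, `videoify("A drawing of a cat")` returns `"A video of a cat"`.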

New "Post-production" menu.

Rolled back to the native upscaler.

New model optimisation :

Temporal attention to improve spatiotemporal prediction.

RifleXRoPE reduces bugs on videos longer than 5s, which raises the maximum video length from 5s to 8s.

More detail here : link